The Savvy Survey #6a: General Guidelines for Writing Questionnaire Items

Introduction

The first five publications in the Savvy Survey Series provide an introduction to important aspects of developing items for a questionnaire. This publication gives an overview of important considerations to make when creating questionnaire items, such as:

- types of information that can be collected;

- parts of a question; and

- appropriate text and wording choices.

However, this will only be a brief overview. There are a number of resources that provide in-depth information on developing surveys that may also be useful during the construction phase (Dillman, Smyth, and Christian, 2014; de Leeuw, Hox, and Dillman, 2008; Groves et al., 2009).

Building Items for the Questionnaire

Throughout the development of a questionnaire, it is important to remember that a successful survey should retrieve complete and accurate information from those being surveyed. One way to accomplish this is through careful construction of the items within the instrument. There are a number of steps that a surveyor can use to help optimize the respondents' answers while enhancing the quality of responses and the reliability of the instrument itself.

Types of Information

First, the surveyor must have a clear idea of what needs to be asked. A questionnaire should have a specific purpose and direction. The instrument needs to be focused on collecting very specific information from those being surveyed. Questionnaires can be used to collect several types of information:

- Knowledge—what people know about a topic

- Attitudes—what people feel about a topic

- Beliefs/Perceptions—what people think or understand about something

- Behaviors—what actions people engage in

- Aspirations—what people hope or plan to do

- Attributes—characteristics people have

Several types of information can be gathered in a questionnaire; however, the question types that are used will differ based on the information that is being sought. One way to focus this process is to use the well-defined objectives established for the survey. Objectives can be constructed using the program's logic model (see Savvy Survey #5).

Types of Questions

Once a clear picture of the desired information is in place, the questionnaire can be crafted to elicit that information from respondents. There are two major question types that can be used in a questionnaire, and each collects certain types of information:

- Open-Ended Questions—a blank answer space provided for a description or explanation, a list of items, numbers, or dates

- Closed-Ended Questions—response choices provided (scale, ordered, unordered, or partial)

Each question type has unique characteristics that may be of use within a survey. Additional information about these two question types can be found in Savvy Survey #6b and #6c, respectively.

For example, if the surveyor is attempting to gain in-depth information about the reasons people have used the Cooperative Extension Service in the past year, but does not have access to an exhaustive list of potential reasons, the use of an open-ended question would be more appropriate than a closed-ended question that provides a pre-determined set of choices. On the other hand, there are times when an exhaustive list of choices for a particular question can be generated and provided for the respondent to choose from. In those instances, a closed-ended question is more appropriate. Before discussing the types of questions that can be used, it is important to understand the individual parts that make up a questionnaire item.

Parts of a Question

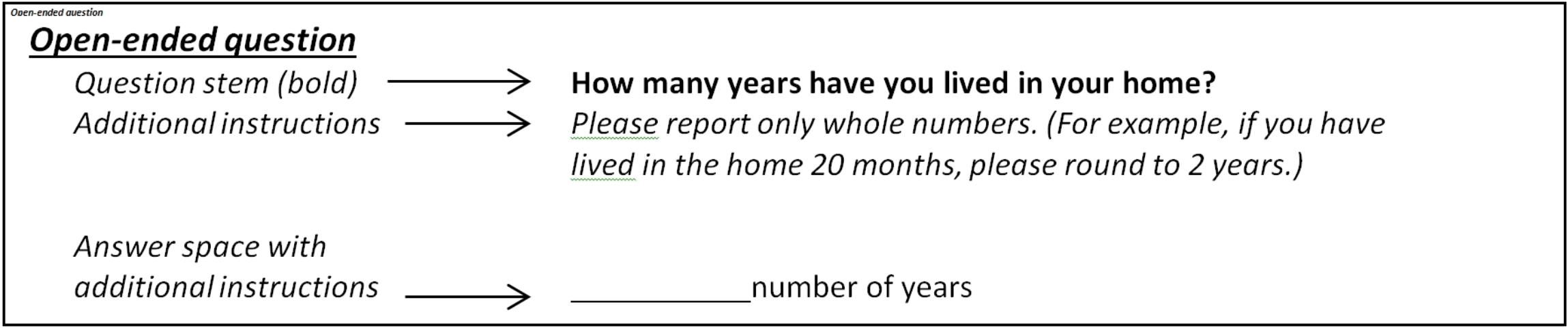

Items in a questionnaire should be constructed in a way that the person being surveyed can adequately interpret what is being asked, recall the relevant information, and then provide a complete and accurate response. There are three main parts of a question (see Figure 1 for an example of these parts within an open-ended question):

- The question stem

- Any additional instructions

- Answer spaces or choices

The most important part of any questionnaire item is the stem, because it is the part that actually asks for information. A well-written stem provides a request for information (e.g., how many years have you lived in your current home).

It may also be appropriate to include additional instructions that the respondent might need in order to most accurately answer the question. These instructions are included after the stem and use some sort of font change to visually set them apart from the stem (see Figure 1). Instructions are used to provide guidance on how the person should frame their response (e.g., number of years).

Finally, it is important to identify where and how the respondent should provide their answer. This may be either a line or box for open-ended questions, or places to mark in a closed-ended question (see Savvy Survey Series #6b and #6c for further information on these question types).

Issues to Consider While Constructing Survey Items

With the basics of question construction made clear, it is time to move on to the process of constructing the items for the survey. There are several issues that must be considered throughout this process, each having a different impact on the reliability (dependability) and validity (truthfulness) of the survey results (Dillman et al., 2014).

1. What type of survey mode(s) will be used to ask the question?

- The various survey modes (in-person, group-administered, mail-based, telephone, online, mixed-mode) present the items in a questionnaire to the survey taker through different channels (auditory versus visual).

- In more auditory modes (e.g., telephone), the meanings and use of words have increased power, and memory issues are a significant factor since visual cues are unavailable.

- In more visual modes (e.g., mail-based), the visual design elements must also be taken into account in addition to word choice.

2. Is/Are the question(s) being repeated from another survey?

- If the answer is YES, will the answers be compared to previously collected data?

- If yes, then usually no changes or only minimal changes can be made to the question(s) and/or visual layout without affecting the reliability and validity of the instrument.

- If the answer is NO, then changes can be made to fit a particular scenario, but note that the new instrument will need to be analyzed to determine its own levels of reliability and validity.

3. Will respondents be willing to answer accurately?

- Sometimes, survey takers are hesitant to honestly answer questions asking about sensitive topics. This avoidance is especially evident when dealing with activities that may be perceived as embarrassing or, to a lesser extent, with attitudes and behaviors that are seen as "socially desirable" (i.e., the survey taker gives the response that they believe the survey creator wants them to give instead of providing a truthful response).

- If it is crucial to gain sensitive information, there are some methods for encouraging survey takers to respond, though reluctance may still occur.

- Change the wording—instead of asking Have you ever improperly disposed of harmful pesticides?, encourage responses by asking Please explain how you dispose of pesticide containers once you have finished with them.

- Combine with less objectionable questions—encourage responses by nesting the objectionable question (Please explain how you dispose of pesticide containers once you have finished with them.) among multiple, less objectionable questions (How often do you use pesticides on your lawn? What types of pesticides do you commonly use? How do you store surplus amounts?).

- Assure confidentiality—explain steps taken to make sure no individual responses can be identified or reported. Describe the manuscripts that will be generated from the data for the group as a whole, with no identifying characteristics.

4. Will respondents be motivated to answer accurately?

- Aside from sensitive subjects, there are additional items that survey takers may not be motivated enough to answer. If too many of these items exist in a survey, the survey itself may not be completed.

- Items that don't require an answer from everyone. For example, the question Do you fertilize vegetables in your garden? does not apply to people who don't garden or those who grow only herbs.

- Avoid asking every survey taker to answer questions that may not apply to them.

- This type of question can cause frustration and may unintentionally promote skipping other items. Instead, use skip instructions to direct survey takers to appropriate sections of the survey (see Savvy Survey #7 for more information on formatting skip patterns).

- Items for which respondents will not have an accurate, ready-made answer.

- An item such as Explain why you either agree or disagree with the statement that farmers are more likely to cause nitrate pollution than homeowners asks the survey taker to provide a detailed response that he or she may not have the motivation or ability to easily provide.

- Reluctance may be compounded by the number of items the respondent has already been asked to complete within the survey (survey fatigue).

- Poorly constructed questions that are too long or too difficult.

- The question How would you rank the importance of the following 20 BMPs for use in managing your farm? asks the survey taker to look through 20 different Best Management Practices (BMPs) and then to rank them according to importance.

- This action can be mentally taxing and time consuming. This task may impact the survey taker's motivation to complete not only this item, but also the remainder of the survey.
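The skip instructions mentioned under issue 4 can be thought of as simple routing rules. The following is a minimal Python sketch of that idea, not a method prescribed by this series. The question identifiers, the screening question in `Q1`, the `SKIP_RULES` table, and the `next_question` function are all hypothetical names introduced only for illustration.

```python
# Hypothetical questionnaire; ids and the Q1 screening item are assumptions.
QUESTIONS = {
    "Q1": "Do you grow vegetables in your garden?",
    "Q2": "Do you fertilize vegetables in your garden?",
    "Q3": "How many years have you lived in your current home?",
}
ORDER = ["Q1", "Q2", "Q3"]

# Skip rule: (question id, answer) -> question id to jump to.
# Respondents who do not grow vegetables never see the fertilizer item.
SKIP_RULES = {("Q1", "No"): "Q3"}


def next_question(current, answer):
    """Return the id of the next question to show, or None at the end."""
    target = SKIP_RULES.get((current, answer))
    if target is not None:
        return target
    position = ORDER.index(current)
    if position + 1 < len(ORDER):
        return ORDER[position + 1]
    return None
```

In this sketch, a respondent answering "No" to `Q1` is routed straight to `Q3`, so the item that does not apply to them is never presented.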

Making Appropriate Text and Wording Choices

Regardless of the type of question being used (open- or closed-ended), there are several basic ways a questionnaire designer can make each item easier to understand.

1. Use simple words to increase understanding (Dillman et al., 2014).

- For example, use honest instead of candid, free time instead of leisure, most important instead of top priority, or work instead of employment.

2. Avoid jargon or abbreviations unless the term is widely understood by the group of individuals being surveyed.

- Extension professionals in Florida will be familiar with the abbreviation EPAF or the idea of achieving parity within served clientele, so using these terms in a survey that targets Extension agents as a population would be appropriate.

- However, a member of the general population may not know what EPAF or parity means, so using these terms anywhere in the survey has the potential to confuse the survey taker.

3. Avoid using vague terms that may have multiple or individually defined meanings.

- For example, regularly (does this mean daily, weekly, or what?), government (is this state, local, or federal?), or older people (how old is "older"?)

- Instead, use specific descriptors.

- Initial version: During the summer months, how regularly do you water your lawn?

- Improved revision: During the summer months (June, July, and August), how many days per week do you water your lawn on average?

4. Phrase questions in a way that the survey taker must give more precise information.

- Don't ask them to choose from a vague list of adjectives; instead, have them provide specific information when it is possible for them to accurately recall it.

- For example, During the summer months (June, July, and August), how many days per week do you water your lawn on average?

- Initial version: never, rarely, occasionally, regularly—these are poorer choices because two people from the same sample may have vastly different interpretations of what these terms mean in this context.

- Improved revision: have the survey taker respond with a specific number (times per week), or provide specific categories for them to choose from (I never water during summer months, 1-2 days a week, 3-4 days a week, 5-6 days a week, I water every day during summer months).

5. Precise information is valuable. However, it is also important to avoid questions that ask for more precise information than the survey takers are able to provide.

- For example, most survey takers would be unable to accurately recall and state the number of articles they've read in the past year pertaining to gardening.

- Also, be sure to use an appropriate time reference:

- sometimes recalling the number of times in a year is possible (e.g., number of visits to the dentist)

- sometimes a week is more appropriate (e.g., number of times watering the lawn)

6. Use complete sentences.

- Initial version: Years farming:

- Improved revision: How many years have you been farming? _____ years

7. Use shorter questions when appropriate.

- For mail and online surveys, it is possible to shorten questions by leaving answers out of the question stem or focusing on a key idea for each question.

8. Soften questions that may be perceived as sensitive information for the taker by providing a range to choose from.

- For example, consider response options for the question What was your gross income in 2013?

- Poor choice: asking the survey taker to fill in a specific dollar amount

- Better option: provide specific, but broad categories for them to choose from (Less than $15,000, $15,000 to $29,999, $30,000 to $44,999, $45,000 to $59,999, $60,000 and above), making sure that the middle category contains the median income for the population of interest.

9. Make responses mutually exclusive.

- Response options should not overlap nor should multiple correct answers exist.

- e.g., Less than $15,000, $15,000 to $30,000, $30,000 to $45,000, $45,000 to $60,000, $60,000 and above

- These categories represent a poor construction since someone with an income of $30,000 has two potential categories that could be selected.

- This overlapping does not occur only with numeric ranges; it can also occur with concept categories.

- For the question How did you first hear about the [Extension program]?, the categories—on the radio, in my office, from a co-worker, in the car, from a billboard, from a family member, from my agent—would represent a poor construction since someone may have heard an ad on the radio while in the car, and would thus have two potential categories that could be selected.

- It is important when selecting categories to make sure that this type of overlapping does not occur. If it is important to find out both the location of the contact (car, UF/IFAS Extension office, home, business) and the contact medium (radio, billboard, poster, word-of-mouth), it would be best to ask these items in two separate questions.

10. It is highly recommended that surveyors avoid asking respondents to Check All That Apply since these questions present many analysis issues. Refer to Savvy Survey #6c for more information on better ways to solicit this type of information.

11. Avoid asking two questions in one (i.e., double-barreled questions).

- For example, the question, Have you made changes in your attitude and behavior toward the environment based on information from the Florida Friendly Landscaping Program? is actually two separate questions. It asks about change in attitude and change in behavior, which are two different concepts.

12. Ask questions that respondents have the information to answer.

- Initial version: Are the current operating hours of the UF/IFAS Extension office satisfactory for your needs? Some respondents might not know what the office hours are in this situation.

- Improved revision: The UF/IFAS Extension office is open 8 a.m. to 5 p.m. on Monday through Friday. Are these operating hours satisfactory for you? This additional layer of information provides details that will paint a complete contextual picture for the respondent and reduces potential confusion or frustration.

13. Use multiple questions to capture complex behaviors.

- It is impossible to truly capture the nature of a complex behavior based on a response to one question. For example, asking farmers Did you use IPM last year? with Yes, No, and Don't Know response options is a poor construction, because IPM includes a number of BMPs.

- Instead, use multiple questions to identify relevant aspects of the behavior. For example, asking farmers whether they scout their fields for pests, establish threshold levels for applying pesticides, and perform other specific practices can lead to a more accurate measure of IPM behavior.
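The mutual-exclusivity check described in guideline 9 can also be done mechanically for numeric categories. The following is a minimal Python sketch under stated assumptions: incomes are treated as whole dollars (so "Less than $15,000" is encoded with an upper bound of 14,999), and the `find_overlaps` function and the tuple layout are hypothetical, introduced only for illustration.

```python
def find_overlaps(categories):
    """Return pairs of labels whose inclusive ranges share at least one value.

    Each category is a (label, low, high) tuple; None marks an open-ended bound.
    """
    def low(cat):
        return float("-inf") if cat[1] is None else cat[1]

    def high(cat):
        return float("inf") if cat[2] is None else cat[2]

    overlaps = []
    for i, a in enumerate(categories):
        for b in categories[i + 1:]:
            # Two inclusive ranges overlap when each starts before the other ends.
            if low(a) <= high(b) and low(b) <= high(a):
                overlaps.append((a[0], b[0]))
    return overlaps


# The poorly constructed categories from guideline 9.
poor = [
    ("Less than $15,000", None, 14_999),
    ("$15,000 to $30,000", 15_000, 30_000),
    ("$30,000 to $45,000", 30_000, 45_000),
    ("$45,000 to $60,000", 45_000, 60_000),
    ("$60,000 and above", 60_000, None),
]

# The improved categories from guideline 8.
better = [
    ("Less than $15,000", None, 14_999),
    ("$15,000 to $29,999", 15_000, 29_999),
    ("$30,000 to $44,999", 30_000, 44_999),
    ("$45,000 to $59,999", 45_000, 59_999),
    ("$60,000 and above", 60_000, None),
]

print(find_overlaps(poor))    # three adjacent pairs share a boundary value
print(find_overlaps(better))  # no overlapping pairs
```

A check like this only catches numeric overlap. Overlap between concept categories, such as "on the radio" and "in the car", still has to be found by reading the response options carefully.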

Practice Makes Perfect

There is both an art and a science to developing high quality questionnaires. The science lies in addressing certain criteria, like the research-based guidelines mentioned above, in order to create an instrument that will produce reliable and valid results. However, this process is not as simple as adding A + B to get C. Instead, to get a high-quality piece, the survey designer must shape and rework the items within the instrument in order to make sure that each element is meaningfully crafted for the intended audience and intentionally placed within the instrument.

Many of the guidelines above were illustrated with both a poorly constructed example and an improved one. It is a best practice to draft a set of questions and then rework the items once the survey is "complete" to make sure that each item fits appropriately within the flow, layout, and objectives of the overall questionnaire. It is also highly recommended that questionnaires be reviewed by peers or state evaluation specialists in order to generate a dialogue about the items being included and to enhance the quality of the overall survey.

In Summary

Developing a high-quality questionnaire is a critical step in collecting useful data for assessing program needs and evaluating the outcomes of programs. The items that are used to collect information must be carefully constructed so that those taking the survey have the ability to answer as easily and accurately as possible. This publication began the discussion of how to improve this step in the survey design process by identifying the types of information that can be captured in a questionnaire, the parts of a question, and the basic considerations to make regarding word and text choices. Related publications will continue with this topic by examining how to construct open-ended and closed-ended questions for a questionnaire.

References

de Leeuw, E. D., Hox, J. J., and Dillman, D. A. (Eds.). (2008). International handbook of survey methodology. New York, NY: Lawrence Erlbaum Associates.

Dillman, D. A., Smyth, J. D., and Christian, L. M. (2014). Internet, phone, mail, and mixed-mode surveys: The tailored design method (4th ed.). Hoboken, NJ: John Wiley and Sons.

Groves, R. M., Fowler, F. J., Couper, M. P., Lepkowski, J. M., Singer, E., and Tourangeau, R. (2009). Survey methodology (2nd ed.). Hoboken, NJ: Wiley.