Introduction

Continuing the Savvy Survey Series, this fact sheet is one of four that introduce important aspects of developing a questionnaire. A survey that provides useful information requires careful design and attention to detail. Several sources provide in-depth information on developing surveys (de Leeuw, Hox, & Dillman, 2008; Dillman, Smyth, & Christian, 2014). This publication focuses on the process of developing survey questions to help ensure that the "right" questions are included in the questionnaire.

In the authors' experience creating numerous survey instruments, one useful process for developing a high-quality questionnaire follows four steps:

- Brainstorm questions on a topic.

- Review the literature for concepts, questions, and indices.

- Collect ideas for questions and wording choices through focus groups or key informant interviews.

- Select questions linked to a program's logic model.

Each of these steps is discussed in more detail below.

Step 1. Brainstorming Questions

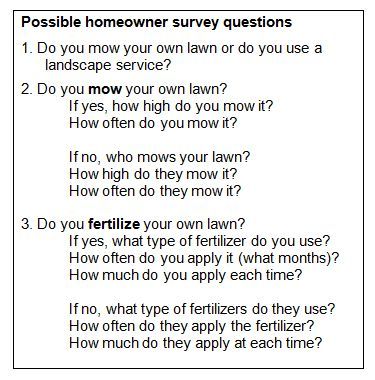

Many questionnaires have their roots in a brainstorming session in which the project team develops a list of questions related to the topic at hand. One benefit of brainstorming is that a large number of questions can be generated very quickly. Brainstorming works well for small and medium-sized groups (6 to 10 people) developing a set of questions for a questionnaire. For example, Figure 1 shows some of the questions agents developed during a brainstorming session for a landscape maintenance survey of homeowners living in neighborhoods with homeowner associations.

However, if brainstorming is the only process used to create the questions, three problems can occur. First, important questions might be omitted because no one thought of them at the time. Second, questions that are irrelevant or tangential to the main topic might be included. Third, the questions may be organized haphazardly, because brainstorming generates ideas in no particular order.

Step 2. Reviewing the Existing Literature for Concepts and Questions

To avoid omitting important questions or including irrelevant ones, a best practice is to consult studies published on the topic area. Journal articles are a good source of ideas about concepts that researchers are measuring and have found to be important. Frequently, the questions used to measure a concept are included in an appendix. If not, most authors are willing to share a copy of the questionnaire when asked.

Studies published in peer-reviewed journals often include a set of questions that form an index or scale to measure people's knowledge or attitudes related to a subject area, along with estimates of the scale's reliability and validity. For example, one might want to measure attitudes about the environment for a needs assessment study, because these attitudes are seen as a precursor to behavioral intent and subsequent behaviors (Hines, Hungerford, & Tomera, 1987). Alternatively, one might want to measure change in those attitudes before and after an environmental education program. In either case, existing, well-developed measures (see Dunlap & Van Liere, 1978; Dunlap et al., 2000) may be used as-is or modified slightly to fit the survey situation. Finally, collections of scales on many topics have been published in books (see, for example, Miller & Salkind, 2002).

Step 3. Collecting Ideas for Questions and Wording Choices through Focus Groups or Key Informant Interviews

Collecting information through focus groups or key informant interviews can help identify important questions for the survey. These conversations can also reveal how people think about the survey topic and the words they use to express their ideas. Using this information helps ensure that questions are asked in a way that is meaningful to respondents. Moreover, talking with people who have different perspectives (for example, residents, homeowner association officers, and landscapers for the landscape maintenance survey described above) builds a broader foundation for developing a respondent-friendly questionnaire.

Step 4. Selecting Questions Based on Logic Models

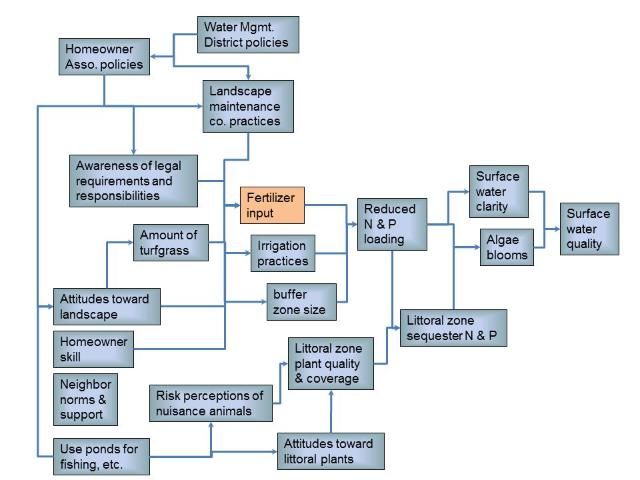

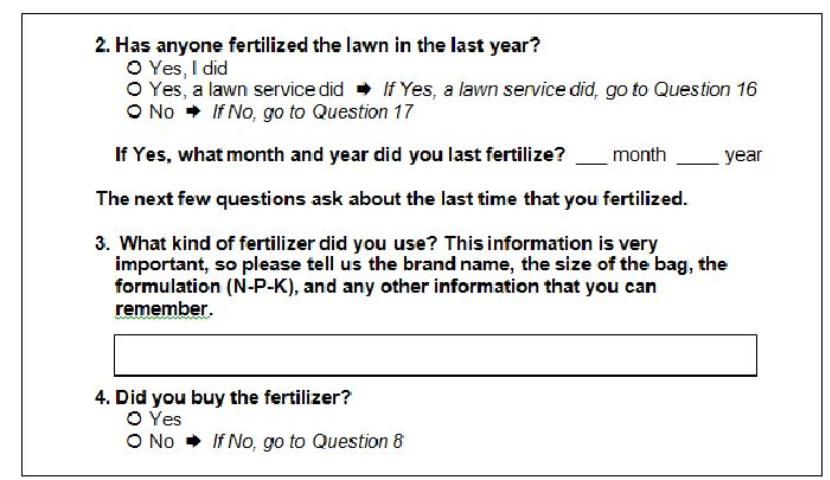

Program planners and evaluators recommend developing a logic model for a program as a best practice (Israel, 2001, 2010). Logic models are also useful for ensuring that the questions in an instrument are relevant and organized in a logical sequence. This is especially true when the logic model displays key elements of the program's context, its major activities, the sequence of outcomes, and the causal connections between each part. Figure 2 shows an example of a logic model that displays the causal pathways of the outcomes and that was used to develop a successful homeowner survey. In this example, each component of the model was used to identify questions to include in the instrument and to determine whether the questions generated during the brainstorming session were relevant. This process led to the selection of a series of questions to collect information about homeowners' fertilizer use, some of which are shown in Figure 3.

In Summary

Developing a high-quality questionnaire is a critical step in collecting useful data for assessing program needs and evaluating program outcomes. By brainstorming questions, reviewing relevant research studies, collecting ideas for questions and wording choices through focus groups or interviews, and linking questions to a logic model, one can create a set of appropriate questions. In the final selection process, it is also good practice to use previously developed measures and to focus on measuring a few things well rather than trying to measure too many things and possibly sacrificing accuracy.

References

de Leeuw, E. D., J. J. Hox, & D. A. Dillman, Eds. (2008). International handbook of survey methodology. New York, NY: Lawrence Erlbaum Associates.

Dillman, D. A., J. D. Smyth, & L. M. Christian. (2014). Internet, phone, mail, and mixed-mode surveys: The tailored design method. (4th ed.). Hoboken, NJ: John Wiley and Sons.

Dunlap, R. E., & K. D. Van Liere. (1978). The "new environmental paradigm": A proposed measuring instrument and preliminary results. Journal of Environmental Education, 9, 10–19. https://doi.org/10.1080/00958964.1978.10801875

Dunlap, R. E., K. D. Van Liere, A. G. Mertig, & R. E. Jones. (2000). Measuring endorsement of the new ecological paradigm: A revised NEP scale. Journal of Social Issues, 56(3), 425–442. https://doi.org/10.1111/0022-4537.00176

Hines, J. M., H. R. Hungerford, & A. N. Tomera. (1987). Analysis and synthesis of research on responsible environmental behavior: A meta-analysis. Journal of Environmental Education, 18(2), 1–8. https://doi.org/10.1080/00958964.1987.9943482

Israel, G. D. (2001). Using logic models for program development. Gainesville: University of Florida Institute of Food and Agricultural Sciences. https://edis.ifas.ufl.edu/WC041

Israel, G. D. (2010). Logic model basics. Gainesville: University of Florida Institute of Food and Agricultural Sciences. https://edis.ifas.ufl.edu/wc106

Miller, D. C., & N. J. Salkind. (2002). Handbook of research design and social measurement. (6th ed.). Los Angeles, CA.