Introduction

This is the fifth of seven publications in the Streaming Science (https://streamingscience.com/) EDIS series about using mobile instructional and communication technologies (ICTs) for outreach and engagement with your target audiences. This publication is intended as a guide for communication and education professionals to use various virtual reality (VR) hardware and software to create immersive online content about agricultural and natural resources spaces and places to communicate, teach, and engage with scientific content.

VR for Science Engagement

VR is no longer an expensive, computer-generated, animated environment that only computer programmers could develop. Rather, VR methods now also include virtual worlds created through affordable 360º photographic imagery (Kuchera, 2020). VR is often a computer-mediated environment and experience, in which participants view a three-dimensional, immersive, programmed, virtual world or 360º images (Meinhold, 2020). You can use consumer-grade, smaller-scale cameras and cloud-based stitching and viewing software to create cost-effective VR tours for target audiences (Mabrook & Singer, 2019; Nagy & Turner, 2019).

The military, education, art, architecture, entertainment, and healthcare fields have all used VR for a variety of purposes (Meinhold, 2020). Research has shown VR is effective to engage diverse audiences in learning about climate change and conservation, as well as to promote pro-environmental attitude and behavior change (Boda & Brown, 2020; Hsu et al., 2018; Markowitz et al., 2018; Schott, 2017). In place-based education, outdoor instruction connecting learners to the environment should ideally take place in person (Smith & Sobel, 2010). However, VR can “take” viewers to locations they may not otherwise visit as well as introduce them to experiences they may not encounter in real life (Bailenson, 2018). You should consider leveraging VR in place-based education to introduce target audiences to agricultural and natural labs and landscapes to better understand scientific processes and concepts such as climate change and sustainable solutions.

Streaming Science VR Approach

In 2018, Streaming Science launched Labs and Landscapes (Figure 1; Stone et al., 2022) to showcase the various VR tours science communication college students created through project-based learning courses in partnership with the platform.

Credit: Jamie Loizzo, UF/IFAS

Students have created 360º tours about forest conservation, prescribed burning, university gardens and food pantries, impacts of COVID-19, and museum exhibits to share with online audiences. Additionally, students working with Streaming Science have deployed and tested their VR tours with public audiences in informal and formal learning settings. A graduate student developed her thesis in partnership with a natural resources conservation organization to evaluate VR tour impacts on youths’ estuary learning (Barnett, 2022). Streaming Science also partnered with two other universities on a national grant to develop VR tours about agricultural and natural resource settings and careers for college and high school students.

Hardware and Software

Streaming Science has created VR projects in three different cost and quality levels: 1) mobile, 2) mid-range, and 3) high-end. The mid-range and high-end quality categories provide users the capability to input information points, so these require further steps and understanding of the software. The following sub-sections outline hardware for image capture, software for stitching and publishing, and project examples for each VR level.

Mobile VR

1. Image Capture

The cameras on mobile phones and tablets have become increasingly sophisticated, making it convenient for you to take high-quality photos in the classroom, lab, or field. At the most basic level, most smartphone camera apps can capture a panorama image, while spherical photos usually require a separately downloaded app. For example, Panorama 360 on iPhone or Cardboard Camera on Android can take spherical photos. Note that mobile apps are constantly changing, and some of these come at a low cost or may require in-app purchases.

2. Publish

Panoramic photos from a phone’s camera roll or spherical images from an app can be uploaded directly to Facebook. The social media platform then converts the photo to a fully immersive image for friends and followers to explore. You can add panoramas and spheres to the Google Cardboard mobile app for sharing with others who have the app or add to Google Maps by contributing the image to a pinned location. Expeditions Pro is also available for creating VR tours in web browsers and as an app on Apple and Android smartphones.

3. View

No special equipment is necessary to view most panorama and spherical images. Most of the images can be viewed on a mobile device or desktop in a web browser and can be navigated by simply scrolling to move around the image at different angles. To create a more immersive viewing experience, you can use a Google Cardboard headset with a smartphone to access images on Facebook or the Cardboard or Expeditions app. This allows you to view the pictures in horizontal mode with split screen, side-by-side, mirror images (one for each eye). Google Cardboard headsets can be purchased from a variety of online vendors individually or in bulk at affordable rates.

Project Examples:

- Undergraduate and graduate students in an agricultural and natural resources communication course in the University of Florida’s Department of Agricultural Education and Communication (UFAEC) used the Google Streetview app on their phones to create photo spheres of numerous locations within UF. Google Streetview was a popular, user-friendly app for taking point-and-shoot spherical photos. However, Google discontinued the app in early 2023. Below is an example of photo spheres, which students created of the UF College of Agricultural and Life Sciences (CALS) Field and Fork Farm and Gardens and published to Google Maps:

- Students also used Google Streetview to create photo spheres of the university’s Natural Area Teaching Laboratory (NATL), a 60-acre wooded and wetland ecosystem for teaching and research:

Mid-Range VR

1. Image Capture

To move beyond mobile to the mid-range VR level, you will need to purchase a camera specifically intended to capture 360º images. Multiple cost-effective options are available, including the Ricoh Theta, GoPro Max, or GoPro Fusion 360º cameras. These cameras typically range from $300 to $1,500 in price. Both brands typically come with the option of purchasing additional accessories and varying storage space to improve your filming experience (e.g., tripods, reusable battery packs, stand weights, rigs, water housing, etc.). The GoPro 360º Max and Fusion cameras are submersible in shallow water up to 16 feet. You can control the cameras manually or remotely by an app on your mobile device. The cameras take a 360º image of the entire area with the push of a button, so you will often need to hide in the background or blend into the scene while confirming that the camera is working.

2. Publish

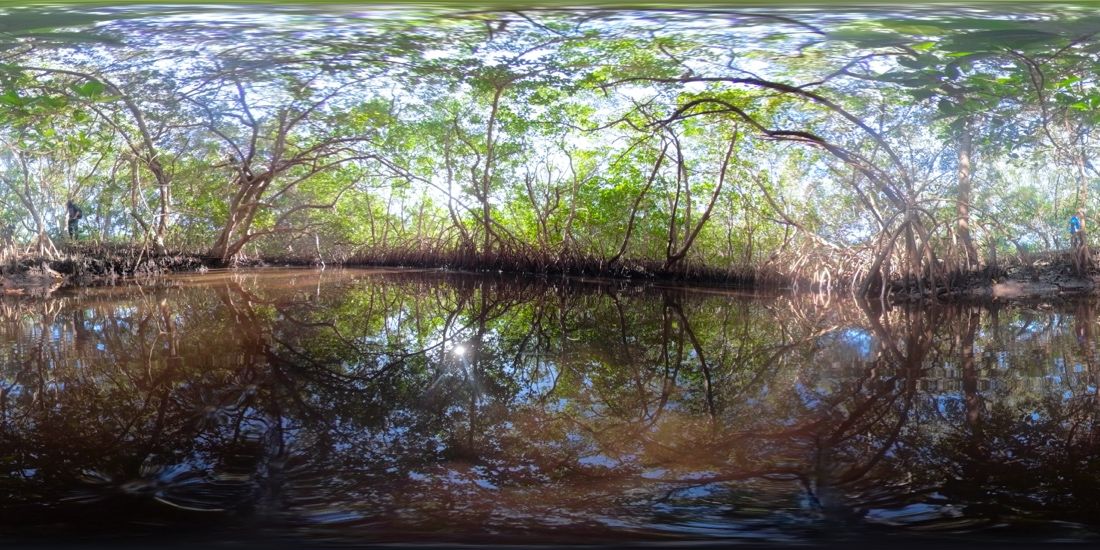

Images are stored on SD memory cards inserted into the camera, and these can be uploaded to your computer. Initially, the photos will look similar to compressed, two-dimensional, panoramic photos (Figure 2) until placed into a stitching software or on a host website such as Theasys 360º Virtual Tour Creator.

Credit: Caroline Barnett, UF/IFAS
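The flat, stretched look described above is typical of an equirectangular projection, in which a full sphere (360º around by 180º up and down) is unwrapped onto a rectangle twice as wide as it is tall. As a minimal sketch, assuming your camera exports equirectangular images (check the camera's documentation), the following Python function tests whether an image's pixel dimensions fit that 2:1 shape before you upload it to a host site. The function name, the tolerance, and the sample dimensions are illustrative assumptions, not values from any camera or host specification.

```python
def looks_equirectangular(width_px: int, height_px: int, tolerance: float = 0.01) -> bool:
    """Return True when an image is roughly twice as wide as it is tall."""
    if width_px <= 0 or height_px <= 0:
        raise ValueError("Image dimensions must be positive.")
    ratio = width_px / height_px
    # Allow a small relative deviation from the ideal 2:1 ratio.
    return abs(ratio - 2.0) <= tolerance * 2.0


if __name__ == "__main__":
    # Hypothetical sizes: a full-sphere export and an ordinary 4:3 phone photo.
    print(looks_equirectangular(6000, 3000))
    print(looks_equirectangular(4000, 3000))
```

An image that fails this check may be a standard photo or a partial panorama, which a host site may not display as a full sphere.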

VR host sites typically come at a cost with monthly and/or yearly subscriptions. The Theasys VR editor includes the option to add clickable hotspots to your 360º images, hotspot editing, transitions, effects, font icons, custom images, 3D text, drawing tools, map and floor plan indicators, background sounds, and more. Subscriptions for Theasys range from $0 per month for up to five panoramas to $20 per month for unlimited image uploads. Subscriptions must be maintained to keep tours viewable on the host website.

3. View

VR tours hosted on sites such as Theasys are often viewable on smartphones, tablets, and/or computer desktop web browsers. The final tours are accessible via hyperlinks and viewable on the host website’s domain. Users can navigate the tours by swiping with their fingers on touchscreens or dragging with a mouse on desktops, and they can tap or click on hotspots for more information or other included media. Theasys also offers the option, in the top right corner of the screen, to click on the goggle viewer icon and split the screen into right and left-eye visuals for portable VR headsets. Finally, while not required, you should consider providing VR tour facilitators and your target audiences with a downloadable written guide that includes learning objectives, location descriptions, hotspot information, a list of links to videos and websites featured within the tour, and references.

Project Examples:

- University of Florida/Institute of Food and Agricultural Sciences (UF/IFAS) Nature Coast Biological Station (NCBS): A tour of the UF/IFAS research and extension station located in Cedar Key, FL, focused on marine and coastal resource management and conservation.

- Ecology of an Estuary: A tour of the Tampa Bay estuary system in partnership with the Tampa Bay Estuary Program (TBEP). Created by author Barnett (2022) for her UF master’s thesis research. Further tour information and accompanying guide can be found here.

High-End VR

1. Image Capture

VR has advanced beyond stitching individual pictures together to higher quality 3D spatial scanning technology. The Matterport Pro 2 camera with tripod ($3,800; camera on left side of Figure 3) can capture scans of buildings, walkways, or any indoor space with a mostly closed 3D structure. The Leica BLK360 G1, the Leica BLK360 G1 Tripod Adapter, and a tripod ($5,600; camera on right side of Figure 3) together can scan any outdoor space, but the camera functions best on overcast days or when the sun is low in the sky (morning or late afternoon) to avoid overheating. The Matterport and Leica cameras connect to a tablet or smartphone via Bluetooth, and the Matterport app operates the cameras and captures the scans. Indoor and outdoor scans can be stitched together, and multiple stories can be scanned to create a multi-level floor plan.

Credit: Jamie Loizzo, UF/IFAS

2. Publish

After scans are complete, the app creates a layout to upload on the Matterport website for editing. This upload can take several hours, depending on the size of the scanned area and how many scans were captured. You can edit the scans with tags and pop-up information including text, videos, and links to websites for audiences to learn more about a location and its features. Adding narration can create a guided tour experience. Once editing is finished, the completed VR tour can be shared with others via a variety of formats: a) password protected, b) unlisted, or c) public. Embed code is also provided so you can place the tour in websites. Matterport tour hosting requires a subscription. Depending on the number of tours housed, you can expect to pay $120 (five tours) to $480 (20 tours) per year.
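To compare budget levels, the prices quoted in this publication can be combined into a rough total for a planning period. The sketch below is a minimal Python illustration, assuming prices stay fixed at the figures listed in this publication and ignoring accessories, taxes, and staff time. The function name and the three-year planning horizon are illustrative assumptions.

```python
def total_cost(hardware_usd: float, hosting_per_year_usd: float, years: int) -> float:
    """One-time hardware price plus hosting fees over the planning period."""
    if years < 0:
        raise ValueError("years must be zero or greater.")
    return hardware_usd + hosting_per_year_usd * years


YEARS = 3  # assumed planning horizon

# Mid-range: $300 to $1,500 camera; Theasys hosting at $0 to $20 per month.
# The $0 plan is limited to five panoramas.
mid_low = total_cost(300, 0 * 12, YEARS)
mid_high = total_cost(1500, 20 * 12, YEARS)

# High-end: Matterport Pro 2 with tripod ($3,800); hosting at $120 to $480 per year.
high_low = total_cost(3800, 120, YEARS)
high_high = total_cost(3800, 480, YEARS)

print(f"Mid-range over {YEARS} years: ${mid_low:,.0f} to ${mid_high:,.0f}")
print(f"High-end over {YEARS} years: ${high_low:,.0f} to ${high_high:,.0f}")
```

Because hosting subscriptions must stay active for tours to remain viewable, changing `YEARS` shows how recurring fees grow relative to the one-time hardware purchase.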

3. View

The tours can be viewed using a smartphone, tablet, computer, Google Cardboard, or an Oculus headset. Viewers can move through the tour using the small white circles located on the ground of the image. The Matterport website also provides features that increase the ease of moving through the tour including a dollhouse view, floor selector, and measurement mode. The dollhouse view shows the floor plan in 3D layers to give a better view of the overall layout. The floor selector can bring the viewer to any floor in the tour. Measurement mode allows users to check distances between objects. You should also consider providing a downloadable print guide for high-end VR tours, so your target audience can easily implement the technology and content in their setting.

Project Examples:

- UF/IFAS Center for Aquatic and Invasive Plants (CAIP): A tour of the center’s indoor offices, lab, and outdoor research mesocosms for invasive plant experiments.

- UF/IFAS NCBS: An additional tour of the research station’s three floors and outdoor boat dock located in Cedar Key, FL.

- Hitchcock Field and Fork Pantry: An informational tour of a pantry located on the University of Florida campus. The tour details how the pantry operates and what items it typically offers.

- Fantastic Fossils Exhibit at the Florida Museum of Natural History: A walk through an exhibit that showcased fossil research, collections, interactive paleontology art, and included a live vertebrate paleontology lab for visitors to engage with paleontologists, students, and volunteers.

Content Development and Implementation

Streaming Science has followed intentional media production and instructional design steps to create VR tours and recommends following the outlined methods below:

1. Identify your target audience(s), topic, learning objectives, location, and expert(s).

Determine who the intended viewers of the VR tour will be. What are their demographics including ages, backgrounds, prior knowledge levels, locations, and more? Select a topic that will interest the target audience to learn more and increase their level of awareness, science literacy, and/or understanding, as well as encourage connection to place and changes in attitude, behavior, or policy. Develop learning objectives for the VR tour based on Bloom’s Taxonomy categories of remember, understand, and apply (Krathwohl, 2002).

Consider developing a tour of a location that the target audience cannot normally access, whether because of distance or physical ability. Some examples include agricultural operations, farms, laboratories, research and education centers, and natural resource landscapes. Identify at least two to three subject matter experts and partner with them to develop accurate tour content.

2. Plan your visuals and outline informational points.

Visit potential physical field sites for a VR tour before taking any photos or scans to scout ideal locations that are visually appealing and accurately match the subject matter and learning objectives. You should also identify ideal lighting and camera placement. Make sure to gain permission for taking images of privately owned locations. Consent is generally not necessary for public spaces in the United States.

Determine how the different scenes within a tour will align to tell a story and how users will navigate the spaces. Consider how tagged, pop-up content or narration will flow to include a beginning, middle, and end. Create a list of what videos, links, and photos exist or should be created to explain key information points within the tour. Develop interview questions ahead of time to be prepared to record conversations with experts for video content. Ideally, it can take one or two crew members up to two days to efficiently capture needed materials for high-quality VR tour creation, depending on the size of the tour site. One day is scheduled to capture 360º scans/photos, and a second day is for recording interviews and two-dimensional photos/media as pop-up content within the tour.

3. Produce and publish your VR tour and supplemental material(s).

A VR tour can take several weeks to fully produce. Start with making edits to the images such as straightening horizon lines, making color corrections, and adjusting brightness, shadows, and contrast. Adjust the immersive images to be environmentally accurate and visually appealing before preparing the informational content in the next step. Review the recorded conversations and interviews with experts for informational content. Then edit the video interviews to include shortened soundbites for pop-up content points within the tour. Consider posting the videos to YouTube and linking them to the tour for ease of viewing, smooth streaming, and reducing file size of the VR tour. Also, make sure to include captions with videos for accessibility. Add text, photo, and website links to credible science-based sources throughout the tour’s tagged pop-ups; proofread and test all content and navigation; and have subject-matter experts review the tour for accuracy and make their suggested corrections before publishing.

Share the tour via a website, email listserv, social media, and any in-person channels that reach your target audience and interested members of the public. Develop and include a written guide on the website alongside the tour with implementation suggestions for how to view the tour. The guide should have an introduction, tour context, learning objectives, and summarized lists of key points found in each visual scene. That way, educators using the tours with groups of learners can prepare ahead of time to then facilitate and guide the VR experience, maximizing engagement and learning of key concepts.
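The guide components listed above can be kept in a simple template so every tour's guide follows the same outline. The following is a minimal Python sketch of one way to do this; the class names, the `render` method, and all placeholder text are illustrative assumptions rather than a Streaming Science tool.

```python
from dataclasses import dataclass, field


@dataclass
class Scene:
    """One visual scene in the tour and its summarized key points."""
    name: str
    key_points: list[str] = field(default_factory=list)


@dataclass
class TourGuide:
    """Written guide outline: introduction, context, objectives, scenes."""
    introduction: str
    tour_context: str
    learning_objectives: list[str]
    scenes: list[Scene]

    def render(self) -> str:
        """Return the guide as plain text, one section per component."""
        lines = ["Introduction", self.introduction, "",
                 "Tour Context", self.tour_context, "",
                 "Learning Objectives"]
        lines += [f"- {objective}" for objective in self.learning_objectives]
        for scene in self.scenes:
            lines += ["", f"Scene: {scene.name}"]
            lines += [f"- {point}" for point in scene.key_points]
        return "\n".join(lines)


# Placeholder content only; replace with your own tour details.
guide = TourGuide(
    introduction="Placeholder introduction for facilitators.",
    tour_context="Placeholder description of the tour location.",
    learning_objectives=["Placeholder objective 1", "Placeholder objective 2"],
    scenes=[Scene("Placeholder scene", ["Placeholder key point"])],
)
print(guide.render())
```

The rendered text can be pasted into a document or web page and formatted for download alongside the tour.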

4. Implement and assess your VR tour.

When using VR with audiences, you can develop a survey, facilitate a discussion, or interview participants about their experiences, learning, attitude change, connection to place, and/or behavior intentions. Assessment methods like these can provide insights into how the audience experienced the technology and virtual spaces, their thoughts on the quality of the images and content, impacts on attitudes toward issues presented in the VR, and whether the tour met its learning objectives. Streaming Science has typically implemented post-retrospective surveys and post-interviews with participants. The results have been used to improve the next VR tour iteration, content development, and delivery.
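As one way to summarize such surveys, the sketch below assumes a retrospective design in which each participant, after the tour, rates an item twice on the same scale: once for how they felt before the tour and once for how they feel now. The ratings shown are invented placeholders, not Streaming Science data, and the function name is illustrative.

```python
from statistics import mean


def mean_change(before: list[int], after: list[int]) -> float:
    """Average of each participant's (after - before) rating difference."""
    if len(before) != len(after):
        raise ValueError("Each participant needs both a before and an after rating.")
    if not before:
        raise ValueError("At least one participant is required.")
    return float(mean(a - b for a, b in zip(after, before)))


# Invented ratings on a 1-5 scale for four hypothetical participants.
before_ratings = [2, 3, 2, 4]
after_ratings = [4, 4, 3, 5]

print(f"Mean change: {mean_change(before_ratings, after_ratings):+.2f}")
```

A positive mean suggests participants rated themselves higher after the tour. A fuller analysis would also report the number of participants and the spread of responses.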

Summary and Additional VR Collaboration

VR tours are an innovative way to engage your target audience in immersive online place-based communication and education to connect them with agricultural and natural resources spaces and places they may not otherwise encounter. There are low-cost, mid-range, and high-end ways to capture, publish, and view 360º images. Science communication college students use a variety of hardware and software to create, implement, and research Streaming Science VR Labs and Landscapes tours about a myriad of agricultural and natural resources topics such as living shorelines, estuaries, forest conservation, food insecurity, museum exhibits, and more to increase viewers’ content knowledge, connection to place, and pro-environmental behavioral intentions.

Extension can utilize the steps outlined in this publication to invest in VR hardware and software at different budget levels. Educators, communicators, and content experts can work together using the outlined steps as a guide to plan, create, and assess VR tour impacts. Across the country, several land grant universities are leveraging VR to reach target audiences with agricultural and natural resources content. An example of large-scale VR collaboration and an additional resource for UF/IFAS is:

iVisit: Interactive Virtual Tours for Advancing Food and Agricultural Sciences

The iVisit project, supported by funding from the United States Department of Agriculture’s (USDA) Higher Education Challenge Grant (award no. 2021-70003-35432), is focused on creating 3D spaces related to food and agricultural sciences (FAS). The iVisit team is led by Texas Tech University with partners at Kansas State University and the University of Florida (including Streaming Science). The team captures VR scans of different FAS systems, techniques, and facilities unique and specific to their region. The intention is to produce a more immersive and interactive experience for students beyond a Zoom call or visit to a website. These interactive virtual tours (IVTs) give college and high school students the opportunity to explore spaces they may otherwise never get to visit, from their home or school. Along with the IVTs, lesson plans and supplemental learning material are provided for teachers to guide students through the spaces and learn about different FAS facilities and practices. The team conducts research on IVT adoption and impacts on students’ connection to place, agricultural and natural resources learning, and career interest. Visit the site to learn more, contact the researchers, and use the IVTs with your target audience: https://theivisitproject.com/

References

Bailenson, J. N. (2018). Experience on demand: What virtual reality is, how it works, and what it can do. W.W. Norton & Company.

Barnett, C. P. (2022). Influence of charismatic animals on youth environmental knowledge and connection to water through the application of virtual reality tours. [Master’s thesis, University of Florida]. https://original-ufdc.uflib.ufl.edu/UFE0059262/00001

Boda, P. A., & Brown, B. (2020). Priming urban learners’ attitudes toward the relevancy of science: A mixed-methods study testing the importance of context. Journal of Research in Science Teaching, 57, 567-596. https://doi.org/10.1002/tea.21604

Hsu, W. C., Tseng, C. M., & Kang, S. C. (2018). Using exaggerated feedback in a VR environment to enhance behavior intention of water-conservation. Educational Technology & Society, 21(4), 187-203.

Krathwohl, D. R. (2002). A revision of Bloom’s Taxonomy: An overview. Theory Into Practice, 41(4), 212-218. https://doi.org/10.1207/s15430421tip4104_2

Kuchera, B. (2020, October 20). The VR revolution has been 5 minutes away for 8 years: What keeps virtual reality stuck in the future? Polygon. https://www.polygon.com/2020/10/20/21521608/vr-headsets-pricing-comfort-virtual-reality-future

Mabrook, R., & Singer, J. B. (2019). Virtual reality, 360º video, and journalism studies: Conceptual approaches to immersive technologies. Journalism Studies, 20(14), 2096-2112. https://doi.org/10.1080/1461670X.2019.1568203

Markowitz, D. M., Laha, R., Perone, B. P., Pea, R. D., & Bailenson, J. N. (2018). Immersive VR field trips facilitate learning about climate change. Frontiers in Psychology, 9(2364). https://doi.org/10.3389/fpsyg.2018.02364

Meinhold, R. M. A. (2020). Virtual reality. In Salem Press Encyclopedia of Science.

Nagy, J., & Turner, F. (2019). The selling of virtual reality: Novelty and continuity in the cultural integration of technology. Communication, Culture, & Critique, 12(4), 535-552. https://doi.org/10.1093/ccc/tcz038

Schott, C. (2017). Virtual fieldtrips and climate change education for tourism students. Journal of Hospitality, Leisure, Sport & Tourism Education, 21, 13-22. https://doi.org/10.1016/j.jhlste.2017.05.002

Smith, G. A., & Sobel, D. (2010). Place- and community-based education in schools. Routledge.

Stone, W., Loizzo, J., Aenlle, J., & Beattie, P. (2022). Labs and landscapes virtual reality: Student-created forest conservation tours for informal public engagement. Journal of Applied Communications, 106(1), 1-17. https://doi.org/10.4148/1051-0834.2395

Appendix A: Streaming Science Series Overview

Streaming Science #1: An Introduction to Using Mobile Devices for Engagement with your Target Audience

Introduces the Streaming Science platform, the mobile technologies students have used to contribute work to the Streaming Science platform, and an overview of types of content created for Streaming Science using mobile technologies.

Streaming Science #2: Using Webcast Electronic Field Trips for Engagement with your Target Audience

Describes the webcast electronic field trip (EFT), how Streaming Science has used the webcast EFT format, and considerations for using this type of instructional and communication technology.

Streaming Science #3: Using Scientist Online Electronic Field Trips for Engagement with your Target Audience

Describes the Scientist Online EFT, how Streaming Science has used the Scientist Online EFT format, and considerations for using this type of instructional and communication technology.

Streaming Science #4: Using Podcasts for Engagement with your Target Audience

Describes podcasting, how Streaming Science has used podcasting, and considerations for using this type of instructional and communication technology.

Streaming Science #5: Using Virtual Reality Tours for Engagement with your Target Audience

Describes virtual reality, how Streaming Science has used virtual reality, and considerations for using this type of instructional and communication technology.

Streaming Science #6: Using Google Classroom for Engagement with your Target Audience

Describes Google Classroom, how Streaming Science has used Google Classroom to host a community of practice, and considerations for using this type of instructional and communication technology.

Streaming Science #7: Using Evaluation to Assess Engagement with your Target Audience via Mobile Technologies

Describes how Streaming Science has used evaluation measures to determine engagement with target audiences through mobile technologies.