Introduction

Extension faculty have many responsibilities serving the public and developing ways to educate and inform their audience. An agent’s job responsibilities include providing evidence of impact that demonstrates the usefulness and worth of the initiative. Impact is shown through evaluation. Even though evaluation is an important part of their job, most county faculty receive limited training on evaluation. Because of other competing demands on their time, agents usually defer learning about evaluation until they need to do their annual report. An assessment of UF/IFAS Extension agents’ evaluation skills found that county faculty were good at evaluating short-term outcomes and professional practices (e.g., including stakeholders, respect for customers, using standards with evaluation) (Lamm, Israel et al., 2011). Only about half of the county faculty assessed behavioral changes, however, and the study suggested that they could improve their evaluation of medium- and long-term goals of their programs (Lamm, Israel et al., 2011). In 2020, about 31% of county faculty reported assessing behavioral changes (Diane Craig, personal communication, April 2, 2021, and 2020 ROAs). The measurement of outcomes is critical to estimating the social, environmental, and economic impacts of Extension activities on communities and individuals. Lamm, Harder and colleagues (2011) knew from experience and from engaging with Extension agents that the current model of professional development, which aimed to increase all Extension faculty members’ evaluation competencies, was not meeting the larger need of high-quality data for accountability reporting.

Because of the need to improve the quality of evaluation, a new model for Extension, the Evaluation Leadership Team (ELT) Model, was proposed (Lamm, Harder et al., 2011). This model is a team-based approach to evaluation in which one team member within a programmatic team or priority work group (teams that are based on the same topics or geography) will be the evaluation lead or liaison. This provides the opportunity for one person to learn in-depth evaluation skills and use their knowledge to help create evaluation plans for programs in their group and help county faculty implement more rigorous evaluations of their programs. This would allow the other Extension agents in the priority groups to focus more of their time on aspects of programming other than evaluation. This publication will discuss the importance of evaluation, the skills and competencies needed for evaluation, details about the ELT model, possible problems and solutions to implementation of the model, and improvements in Extension programming evaluation that could develop with the use of this model.

Why do we need evaluation?

Evaluation is needed for all programs, plans, projects, products, and processes to assess how well they are working and to show their value (Israel, Diehl, & Galindo-Gonzalez, 2009). Evaluation is essential for showing the social and public value of Extension programs (Ghimire & Martin, 2013) and stakeholders’ return on investment (Kluchinski, 2014), and it is considered an essential competency in Extension to create quality programs (Narine & Ali, 2020). Benefits of evaluations to individuals working within Extension include being recognized for their achievements, establishing a level of accountability, improving plans and programs, and making beneficial programs for their community (Flack, 2019; Narine & Ali, 2020). Evaluations also provide data to put in workload reports. Formal evaluations are needed to document the outcomes and impacts of programs in Extension to provide the proof of these programs’ value. As the saying goes, “if it is not documented, then it did not happen.”

A good program evaluation assesses knowledge gain, skill development, or behavioral impacts in both the short and long term. In addition, a good program-level evaluation can show impact on social, economic, and environmental conditions in the community or among participants. However, developing high-quality evaluations requires skills and competencies that can take a considerable amount of time and commitment to develop.

Evaluation Competencies and Development of These Skills

The American Evaluation Association (AEA) (AEA Evaluator, n.d.) developed a set of competencies that evaluators should have to appropriately conduct a program evaluation. These are organized into five domains:

- Professional practice skills help show evaluation as a distinct professional practice by using systematic evidence for evaluation; being ethical (through integrity and respect); selecting appropriate evaluation approaches and theories; looking for ways to improve the practice through personal growth and continuing education; and identifying how evaluation can help improve society and advocate for the evaluation field.

- Methodology involves the technical parts of evaluation or the processes that should be followed for high-quality evidence-based evaluation. First the evaluator needs to identify purpose or need and the evaluation questions, then design a credible and feasible evaluation. This is done through selecting the method (qualitative, quantitative, or mixed methods) and justifying it; identifying assumptions and program logic; reviewing literature; identifying evidence sources and sampling procedure; involving stakeholders in the process; and then collecting and analyzing data, interpreting the findings and drawing conclusions from this evidence.

- Context refers to the need to understand and respect the uniqueness of each evaluation situation. There needs to be an understanding of different perspectives, cultural competency, setting, participants, stakeholders, and users for each evaluation. The environment, setting, stakeholders, participants, organization/structure, culture, history/traditions, values/beliefs of the program and its community need to be understood to pick diverse participants and stakeholders to include in the process. Then these should be described in the findings and conclusion to help communicate and promote the use of the evaluation.

- Planning and management refers to creating a feasible evaluation with the plan, budget, resources, and timeline; deciding and monitoring a work plan through a coordinating/supervising process, safeguarding data, planning use for evaluation, documenting the evaluation and process, working with teams, using the appropriate technology, and working with stakeholders to build the evaluation; and presenting work in a timely manner.

- The Interpersonal domain refers to the evaluator having the people skills needed to work with diverse groups of stakeholders to conduct program evaluations and improve the professional practice. These people skills include communication skills to engage different perspectives; the ability to facilitate shared decision-making; building trust; awareness of and ability to deal with power imbalances among people involved in the program or organization impacting evaluation; cultural competence; and conflict management.

These competencies demonstrate the complexity of doing an evaluation well. This also shows that an Extension agent evaluating a program does not just need to know how to create the evaluation, collect data on it, and analyze and interpret it, but they must also be skilled in the other competencies. An Extension agent needs to have the ability to communicate about the evaluation to the community and stakeholders and the different methods and theories behind a quality evaluation. They must also make sure their evaluations are practical and used, by understanding the context impacting the evaluation. The AEA also has guiding principles to reflect their values for ethical conduct of evaluators, which include systematic inquiry, integrity, respect for people, common good and equity, and competence (AEA Guiding, n.d.). This means, for example, that many evaluations should have their instruments and procedures approved by the Institutional Review Board (IRB). See Gariton and Israel (2021) for clarification about when IRB approval is necessary.

Developing these competencies takes time, commitment, and motivation. Research has found single trainings for Extension agents on conducting high-quality program evaluations to be ineffective (Arnold et al., 2008; Diaz et al., 2020). Competence in this area requires employers to provide ongoing proactive in-service training and opportunities to learn to develop and maintain these skills (Diaz et al., 2020), which could be quite expensive if Extension were to provide this continuous training to everyone. When Extension agents have been surveyed, they reported a need for more training in evaluation skills, help planning an evaluation, working with data (collecting, analyzing, reporting), and sharing findings (Diaz et al., 2020; McClure et al., 2012; Narine & Ali, 2020). Agents also have stated needs for teamwork and evaluation leaders (Flack, 2019; Silliman et al., 2016). Recall that teamwork in evaluation is considered a principle of good evaluation by the AEA (AEA Guiding, n.d.). The question is, “Can all county faculty be trained and develop a deep understanding and expertise of evaluation to perform high-quality evaluations?” Lamm, Israel and colleagues (2011) think it should be a team-based model that trains individuals at the county level to lead the development of evaluations while the other county faculty attain a basic understanding of evaluation to help implement them. The ELT (Evaluation Leadership Team) model is a possible team-based solution for evaluation that can create a bridge for communicating the impact of programs between state faculty and county faculty, a bridge that does not exist now.

Evaluation Leadership Team (ELT) Model

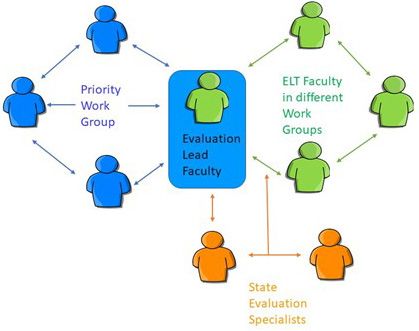

The structure suggested by the ELT model is a natural fit for Extension given its intended use of various types of teams (including goal teams, priority work groups, and action teams). Several EDIS publications have suggested county faculty work together in teams to alleviate the pressure and time commitment felt when striving to appropriately evaluate their programs (Israel et al., 2009; Lamm, Israel et al., 2011). It has also been shown that Extension agents want teamwork in evaluation (Flack, 2019) and that the needs for evaluation and competencies change for different areas or priority groups (Ghimire & Martin, 2013; McClure et al., 2012). The departure from current practice to the new model requires that county faculty and other faculty in a priority group designate an evaluation leader from within each statewide initiative and priority work group. The designated evaluation leaders would then work with state evaluation specialists to develop the competencies needed to create and conduct high-quality evaluations. By becoming a competent evaluator, the evaluation leader would then help coordinate and develop the evaluation tools and processes within their work group (Figure 1).

Launching the ELT model would require:

- Commitment from a group of county faculty to complete evaluation training, one or two individuals from each priority work group.

- A series of in-depth evaluation workshops (equivalent to a graduate-level college course).

- An administrative commitment to providing resources to ELT faculty/teams, including training, reduced reporting expectations for their other tasks (at least during training), evaluation data collection, data entry, analysis tools, locations to document and share evaluation, and using evaluations to improve programming.

- Incentives for county faculty to be ELT members.

The in-depth workshops might consist of an initial evaluation training lasting two or three days, followed by participation once a week for three to four months in a two-hour online session focused on a program-based evaluation project. The county faculty who choose to participate in the ELT and receive focused training will be recognized as Evaluation Leaders upon completion. The county ELT agents would be supported by state evaluation specialists and other UF faculty with relevant expertise, as well as their ELT peer group, whom they can contact on a regular basis to help with questions or concerns.

Specific Potential Benefits of Adopting ELT

Adoption of the ELT model would generally eliminate the need for all county faculty to be experts in evaluation. The development of rigorous evaluation plans would be handled by individuals prepared for that role while allowing the remaining team members to specialize in other aspects of programming. The specific benefits of the ELT model would be:

- Reducing pressure and time commitment of evaluation for all Extension agents, making it less of a burden and making it more of a focus for one person.

- Providing evaluators at the county level with the training and resources needed to conduct high-quality evaluations.

- Creating a group of evaluation leaders that state evaluation specialists can work with directly to address the specific needs of the groups they represent, thereby creating a bridge in the network.

- Helping data collection to be more consistent and thus able to be used for better understanding of programs’ impacts and to directly align with outcomes in Extension’s Workload reporting system. Shifting Extension to a more standardized reporting process and IT system would create a more streamlined way of assessing Extension as a whole, as well as for counties and regions.

- Creating a resource archive of surveys or forms that are ready for other Extension agents to use.

- Producing a primary contact (who has experience working as an agent) for other county faculty to get help with evaluation efforts that include Qualtrics questions, analysis of data, interpretation, and what to do with the evaluation.

- Allowing more time for other agents to develop and implement creative, high-quality educational programs for clientele.

- Creating a more interdisciplinary team among the ELT agent, the work group, and other ELT agents, bringing different perspectives to issues and concepts.

Ultimately, the goal of the ELT model is to enhance Extension's ability to conduct rigorous evaluations that demonstrate Extension's public value.

Key Challenges and Solutions

It may be difficult for people to envision how to implement this model, so this section identifies potential challenges along with possible solutions and opportunities for addressing them.

The first potential challenge is recruitment of the ELT members. State specialists, Extension administrators, district directors, and other administrators should emphasize the importance of this role and inform people about these opportunities. In addition, county faculty who have an interest in evaluation, who have a professional development project, or who are seeking an advanced degree might be well suited for the ELT.

The next challenge is workload concerns. County faculty might feel that becoming an ELT member would increase their workload. The work group, district directors, and state specialists should identify ways to decrease agents’ other programs or reduce other responsibilities to allow for evaluation development, implementation, analysis, and support of other agents. The work could also be part of the agent’s ROA packet (with a situation, objectives, methods, and outcomes for their evaluation work) and would be rewarded in the promotion process.

The third challenge is incentives. Providing agents with certification (to add to a résumé), extra skills training for more job opportunities, bonus pay, and public expressions of appreciation by UF/IFAS leaders can help reward county faculty for stepping up as evaluation leaders.

The fourth challenge identified is evaluation’s value within the organizational culture. From the new agent orientation to in-service trainings, there should be a consistent message affirming the importance of evaluation, especially from administration (including Extension deans, associate deans, district directors, and other administrators). When surveyed in another state, evaluation lead county agents thought they needed more support from administration (Silliman et al., 2016). Administration needs to support and advocate for high-quality evaluation so that faculty will see this is an important part of Extension.

If administrators and Extension specialists do not fully understand evaluation, they should pursue training themselves. Leaders need to be able to talk about what evaluation does for Extension and county faculty members’ jobs. In addition, providing people with examples of evaluation success stories can demonstrate feasibility and encourage county faculty to aspire to the role. Because evaluation is expected, Extension leadership needs to provide information and support for creating the most effective programs to help clientele.

Even though there are some challenges in implementing this model, there are many ways to make the ELT model work to benefit and improve Extension.

Conclusions

The ELT model is a way to encourage evaluation efforts in Extension and promote teamwork. This model will encourage team members who are more inclined toward evaluation to pursue this endeavor while freeing up time for other agents. It would also streamline the evaluation process, with the evaluation faculty conducting most of the evaluations, giving other agents a resource to contact, and setting up a system for evaluation faculty to know whom to contact for extra help. This will also make it easier to compare programs from county to county, which will make Extension’s results more transparent to the public and funding agencies. There may be some challenges to implementing this model, but if these can be worked through by county and state faculty, and supported by administrators, this strategy could improve evaluation and accountability.

References

AEA Evaluator Competencies. (n.d.). Retrieved June 30, 2021, from https://www.eval.org/About/Competencies-Standards/AEA-Evaluator-Competencies

AEA Guiding Principles. (n.d.). Retrieved June 30, 2021, from https://www.eval.org/About/Guiding-Principles

Arnold, M. E., Calvert, M. C., Cater, M. D., Evans, W., LeMenestrel, S., Silliman, B., & Walahoski, J. S. (2008). Evaluating for impact: Educational content for professional development. Washington, DC: National 4-H Learning Priorities Project, Cooperative State Research, Education & Extension Service, USDA.

Diaz, J. M., Jayaratne, K. S. U., & Kumar Chaudhary, A. (2020). Evaluation competencies and challenges faced by early career Extension professionals: Developing a competency model through consensus building. Journal of Agricultural Education and Extension, 26(2), 183–201. https://doi.org/10.1080/1389224X.2019.1671204

Flack, J. A. (2019). Factors influencing program impact evaluation in cooperative extension [Ph.D., North Dakota State University]. https://www.proquest.com/docview/2364043600/abstract/A1A8ECA063CD4F31PQ/1

Gariton, C. E., & Israel, G. D. (2021). The Savvy Survey #9: Gaining institutional review board approval for surveys. EDIS, 2021(3). https://doi.org/10.32473/edis-PD084-2021

Ghimire, N. R., & Martin, R. A. (2013). Does evaluation competence of extension educators differ by their program area of responsibility? Journal of Extension, 51(6). https://archives.joe.org/joe/2013december/rb1.php

Israel, G. D., Diehl, D., & Galindo-Gonzalez, S. (2009). Evaluation situations, stakeholders & strategies. EDIS, 2010(1). https://journals.flvc.org/edis/article/view/118267

Kluchinski, D. (2014). Evaluation behaviors, skills and needs of Cooperative Extension agricultural and resource management field faculty and staff in New Jersey. Journal of the NACAA, 7(1).

Lamm, A. J., Harder, A., Israel, G. D., & Diehl, D. (2011). Team-based evaluation of Extension programs. EDIS, 2011(8). https://doi.org/10.32473/edis-wc118-2011

Lamm, A. J., Israel, G. D., Diehl, D., & Harder, A. (2011). Evaluating Extension programs. EDIS, 2011(5/6). https://journals.flvc.org/edis/article/view/119231

McClure, M. M., Fuhrman, N. E., & Morgan, A. C. (2012). Program evaluation competencies of extension professionals: Implications for continuing professional development. Journal of Agricultural Education, 53(4), 85–97. https://doi.org/10.5032/jae.2012.04085

Narine, L. K., & Ali, A. D. (2020). Assessing priority competencies for evaluation capacity building in Extension. Journal of Human Sciences and Extension, 8(3), 58–73. https://www.jhseonline.com/article/view/919

Silliman, B., Crinion, P., & Archibald, T. (2016). Evaluation champions: What they need and where they fit in organizational learning. Journal of Human Sciences and Extension, 4(3), 22–45. https://www.jhseonline.com/article/view/756