Abstract

This eighth publication in the Conducting the Needs Assessment series provides Extension professionals and other service providers with directions for using the Borich model to collect data about the training needs of a target audience and for using those data to improve programming.

For a complete list of the publications in this series, refer to the overview of the Conducting the Needs Assessment series in Appendix A.

Introduction

The Borich model (Borich, 1980) was designed to identify the competencies for which a target audience needs training. Although it was originally intended to identify the training needs of teachers, the Borich model has been widely adapted and used with a variety of audiences. This publication describes five primary steps for using the Borich model in an Extension context: (a) determine which competencies to assess, (b) conduct a participant self-assessment, (c) rank training needs, (d) evaluate current programming, and (e) revise program or competency expectations as needed.

Step 1: Determine Which Competencies to Assess

Competencies are the knowledge, skills, and attitudes needed to be successful (Iqbal et al., 2012; McClelland, 1973). In Extension, competencies have been identified for audiences such as Extension leaders (Atiles, 2019), entry-level Extension educators (Scheer et al., 2011), and UF/IFAS Extension county faculty specifically (Harder, 2015). However, competencies can also describe the desired knowledge, skills, and attitudes of Extension audiences, such as Master Gardeners, 4-H volunteers, agricultural producers, and homeowners. Borich (1980) advised that a list of competencies should be developed from past studies of effectiveness (e.g., the competencies a volunteer or a community leader needs to be effective) or from important program learning objectives. All competency statements should be checked against program activities to ensure the chosen competencies are relevant (Borich, 1980).

Competency statements often include multiple related elements. However, to prevent confusion, competency statements included in a survey instrument should not be double-barreled, that is, they should not contain more than one skill per statement (Dillman et al., 2014). For example, “able to plan and evaluate programs” should be split into “able to plan programs” and “able to evaluate programs.” The chosen competency statements should then be combined into a single survey instrument (Borich, 1980). For more information on developing surveys, see the Savvy Survey series on EDIS.

Step 2: Participant Self-Assessment

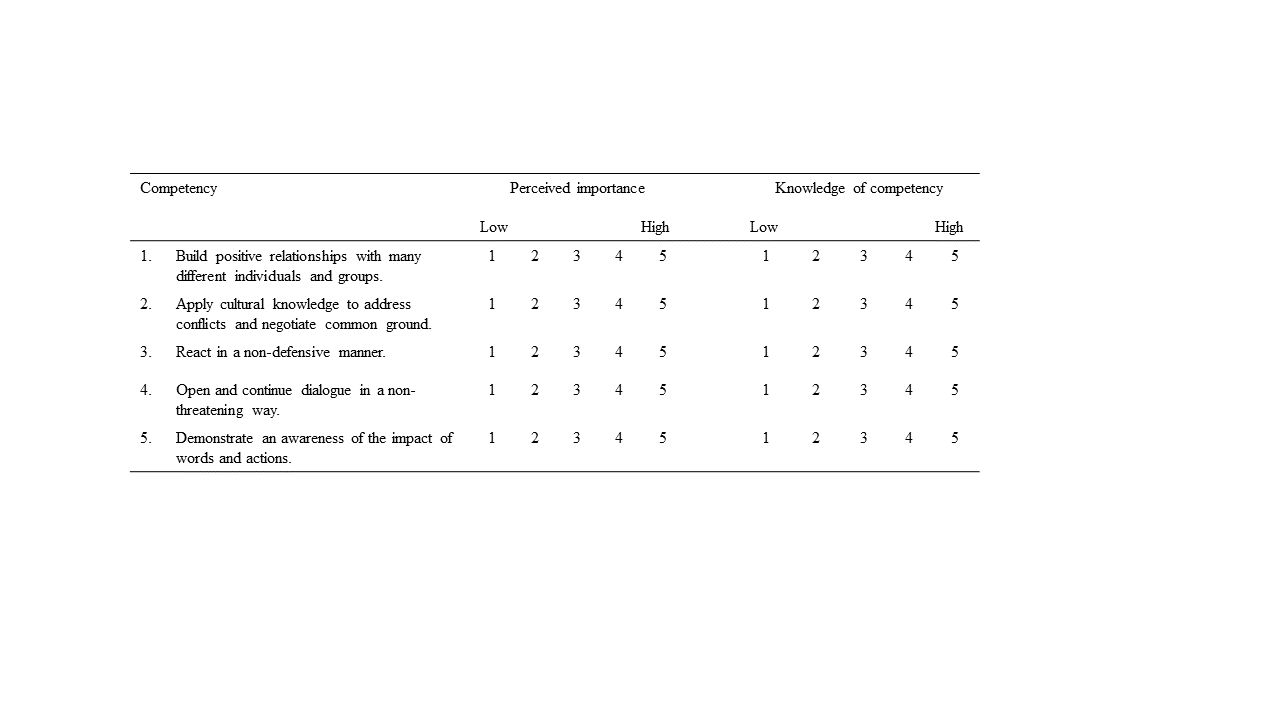

A survey with items measured on an ordinal scale (i.e., low to high) should be developed using the selected competency statements. For each competency, participants should rate both its perceived importance and their perceived level of attainment (Borich, 1980) (see Figure 1). This approach allows participants’ perceptions of a competency’s importance to be compared with their perceived attainment of it (Borich, 1980), which is useful for determining whether participants need additional training to boost their skill levels. The difference between the two ratings (importance minus attainment) is known as a discrepancy score (Borich, 1980). Some Extension needs assessments conducted with the Borich model have used Likert scales (Benge et al., 2020; Connor et al., 2018), a more specific form of ordinal scale that measures the extent to which participants disagree or agree with various competency statements.
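As a minimal illustration of the discrepancy score described above, the Python sketch below computes importance minus attainment for one participant. The competency names and ratings are hypothetical, and the dictionary layout is simply one convenient way to hold survey responses, not a format prescribed by Borich (1980).

```python
def discrepancy(importance: int, attainment: int) -> int:
    """Borich discrepancy score: perceived importance minus perceived attainment."""
    return importance - attainment


# Hypothetical ratings from one participant on a 5-point scale (1 = low, 5 = high).
ratings = {
    "Plan programs": {"importance": 5, "attainment": 3},
    "Evaluate programs": {"importance": 4, "attainment": 4},
    "Manage conflict": {"importance": 3, "attainment": 4},
}

# Print the discrepancy score for each competency this participant rated.
for competency, r in ratings.items():
    score = discrepancy(r["importance"], r["attainment"])
    print(f"{competency}: discrepancy score = {score}")
```

A positive score means the participant rates the competency as more important than their current attainment, which signals a possible training need; a score of zero means the two ratings match, and a negative score means attainment exceeds importance.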

Credit: Adapted from “Priority Competencies Needed by UF/IFAS Extension County Faculty,” by A. Harder, 2015, EDIS, AEC574 (https://edis.ifas.ufl.edu/publication/WC236).

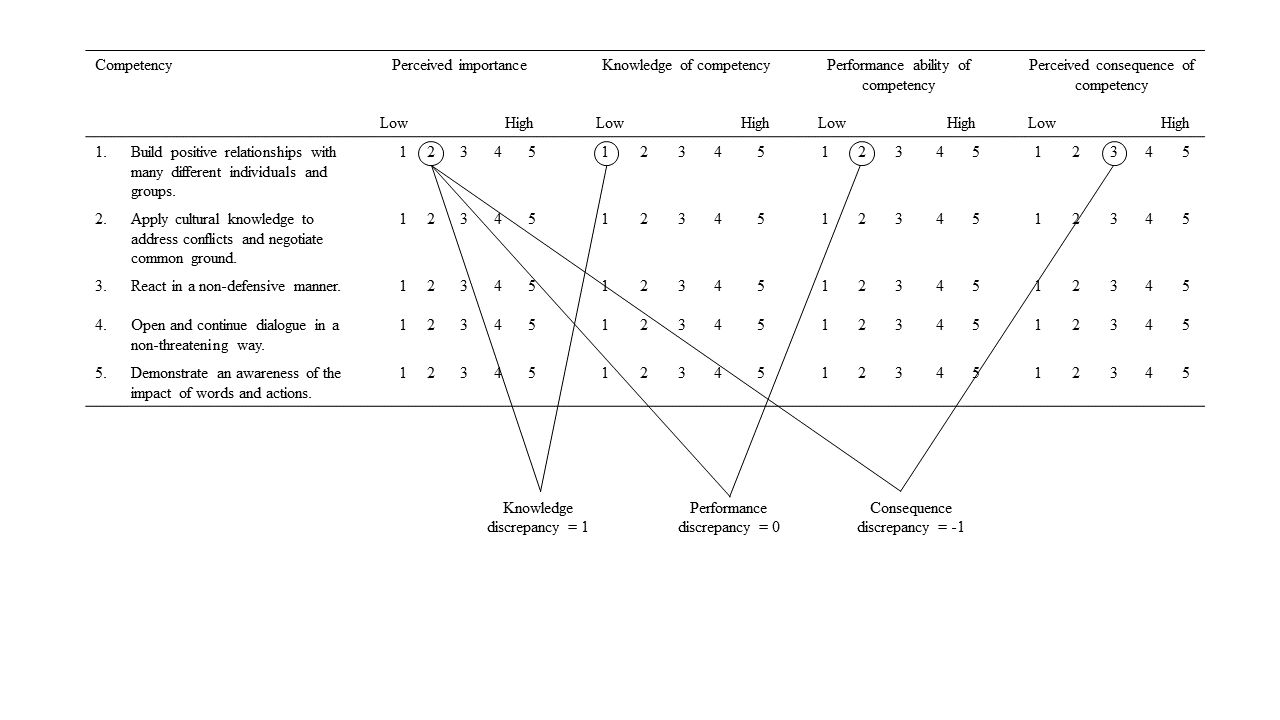

Sometimes, it is useful to separate perceptions of skill attainment into specific areas, such as (a) knowledge, (b) performance, and (c) consequence (Borich, 1980). Define these terms for participants completing the survey. Knowledge is the ability to recall information through either written or spoken communication, whereas performance is the ability to perform a specific behavior (in a real or simulated environment) with an observer present (Borich, 1980). Last, consequence describes an individual’s ability to elicit change among learners by using the competency (Borich, 1980). Comparing each of the three types of skill attainment with perceived importance yields three discrepancy scores: (a) knowledge, (b) performance, and (c) consequence (Borich, 1980) (see Figure 2). These discrepancy scores can help you determine whether your participants lack information about the competency, lack the ability to perform it, or need to learn more about the consequences of using it. Educational programs can then be tailored and improved to address these specific discrepancies. In practice, Extension assessments have often focused only on knowledge (Gunn & Loy, 2015; Krimsky et al., 2014; Lelekacs et al., 2016) or performance (Bayer & Fischer, 2021; Roback, 2017), rather than on all three components of attainment.
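Extending the same idea, the sketch below computes the three discrepancy scores (knowledge, performance, and consequence) for one participant and one competency. The `CompetencyResponse` class and its ratings are hypothetical, and the sketch assumes each attainment area was rated on the same 5-point scale as importance.

```python
from dataclasses import dataclass


@dataclass
class CompetencyResponse:
    """One participant's hypothetical ratings for one competency (1-5 scale)."""

    importance: int
    knowledge: int
    performance: int
    consequence: int

    def discrepancies(self) -> dict[str, int]:
        # Each attainment area is compared with the same importance rating.
        return {
            "knowledge": self.importance - self.knowledge,
            "performance": self.importance - self.performance,
            "consequence": self.importance - self.consequence,
        }


response = CompetencyResponse(importance=5, knowledge=4, performance=3, consequence=2)
print(response.discrepancies())
```

In this made-up response, the consequence discrepancy is the largest of the three, which, following the interpretation above, would suggest that programming should help this participant learn more about the consequences of using the competency.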

Credit: Adapted from “Priority Competencies Needed by UF/IFAS Extension County Faculty,” by A. Harder, 2015, EDIS, AEC574 (https://edis.ifas.ufl.edu/publication/WC236).

Step 3: Rank Training Needs

Calculating discrepancy scores offers insight into a single participant’s perceptions. However, the Borich model also takes into account the judgment of the participant group to overcome any individual’s errors in judgment (Borich, 1980). This is done by calculating a mean weighted discrepancy score (MWDS), as shown in Table 1. First, calculate the discrepancy score (perceived importance minus perceived level of attainment) for each participant and competency. Next, calculate each participant’s weighted discrepancy score by multiplying that participant’s discrepancy score by the mean perceived importance rating for the competency across all participants (Borich, 1980). Finally, calculate the MWDS for the competency by summing the weighted discrepancy scores and dividing by the number of responses.
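The three calculation steps above can be sketched in Python as follows, assuming complete responses (every participant rated both importance and attainment for each competency). The function name `mwds`, the competency names, and the ratings are hypothetical; the same calculations can be done in a spreadsheet like the one described in Table 1.

```python
from statistics import mean


def mwds(importance: list[int], attainment: list[int]) -> float:
    """Mean weighted discrepancy score (MWDS) for one competency.

    importance[i] and attainment[i] are participant i's ratings on the
    same ordinal scale. Assumes every participant rated both items.
    """
    if not importance or len(importance) != len(attainment):
        raise ValueError("expected one importance and one attainment rating per participant")
    # Group mean importance for this competency, used as the weight.
    mean_importance = mean(importance)
    # Step 1: discrepancy score for each participant (importance minus attainment).
    discrepancies = [imp - att for imp, att in zip(importance, attainment)]
    # Step 2: weighted discrepancy score for each participant.
    weighted = [d * mean_importance for d in discrepancies]
    # Step 3: sum the weighted scores and divide by the number of responses.
    return sum(weighted) / len(weighted)


# Hypothetical ratings from four participants (1-5 scale); not real data.
survey = {
    "Plan programs": ([5, 4, 5, 4], [3, 3, 4, 2]),
    "Evaluate programs": ([4, 4, 3, 4], [4, 3, 3, 4]),
    "Manage conflict": ([5, 5, 4, 5], [2, 3, 2, 3]),
}

# Rank competencies from largest to smallest MWDS.
ranked = sorted(
    ((name, mwds(imp, att)) for name, (imp, att) in survey.items()),
    key=lambda pair: pair[1],
    reverse=True,
)
for name, score in ranked:
    print(f"{name}: {score:.2f}")
```

Sorting by MWDS in descending order puts the competencies with the largest group-level gaps first. Surveys with skipped items would need a decision about how to handle missing ratings before applying a function like this one.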

Table 1. Example of a Spreadsheet with Borich Model Data and Calculations.

Compare the MWDS of the assessed competencies to determine which ones should be the focus of improved or additional programming. Borich model assessments designed using 5-point ordinal scales, like the examples in Figures 1 and 2, will have possible MWDS values ranging from -4 to 20 (Narine & Harder, 2021). Larger MWDS values indicate bigger gaps between importance and attainment across the group surveyed, and therefore greater training needs. Competencies with the largest MWDS should be interpreted as the highest training priorities.
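Because every participant’s discrepancy score is multiplied by the same group mean importance, the MWDS procedure from Step 3 reduces to a short formula. In the notation below (introduced here for illustration, not taken from Borich, 1980), I_i and A_i are participant i’s importance and attainment ratings for one competency, and n is the number of responses.

```latex
\mathrm{MWDS}
  = \frac{1}{n}\sum_{i=1}^{n} \left(I_i - A_i\right)\bar{I}
  = \bar{I}\left(\bar{I} - \bar{A}\right),
\qquad
\bar{I} = \frac{1}{n}\sum_{i=1}^{n} I_i,
\quad
\bar{A} = \frac{1}{n}\sum_{i=1}^{n} A_i

% 5-point scales, every respondent giving the same extreme ratings:
\text{largest: } \bar{I} = 5,\ \bar{A} = 1 \;\Rightarrow\; 5\,(5 - 1) = 20
\qquad
\text{smallest: } \bar{I} = 1,\ \bar{A} = 5 \;\Rightarrow\; 1\,(1 - 5) = -4
```

The -4 and 20 endpoints correspond to every respondent choosing the same extreme ratings. Because the MWDS is a product, mixed responses can push it somewhat below -4 (for example, a mean importance of 2.5 with a mean attainment of 5 gives -6.25); any negative MWDS simply means that average attainment exceeds average importance, so that competency is not a training priority.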

Step 4: Evaluate Current Programming

Borich (1980) explained that when a competency is rated as highly important yet participants report low attainment, it may be an indicator of “insufficient rather than ineffective training” (p. 41). Competencies also differ in complexity. Some are relatively easy to gain knowledge about or learn to perform, such as calibrating a home sprinkler system. Others, like managing conflict, are more complex and will likely require more intensive programming. Evaluate whether the observed training needs reflect a need for more training or a sign that the current training does not cover what participants need to meet competency attainment goals. This is also a good time to consider whether the appropriate instructors are involved in the program and how their skills as educators may influence competency development outcomes.

Step 5: Revise Program or Competency Expectations as Needed

Program providers should examine the instructional resources dedicated to the assessed competencies and reallocate training materials to high-priority competencies (Borich, 1980). However, improving high-priority areas may not always be cost-effective; in that case, partnering with others who can provide the training needed to reach the desired level of attainment may be appropriate. If no viable options exist for providing programming that leads to the desired attainment of competencies, then expectations should be revised to reflect reality (Borich, 1980).

Summary

This publication described five primary steps for using the Borich model (Borich, 1980) to determine the competency training needs of a target audience. Results from a Borich model needs assessment should be used either to direct training toward the competencies with the largest mean weighted discrepancy scores or to adjust expectations so that they reflect realistic competency attainment given available resources.

References

Atiles, J. H. (2019). Cooperative Extension competencies for the community engagement professional. Journal of Higher Education Outreach and Engagement, 23(1). https://openjournals.libs.uga.edu/jheoe/article/view/1431

Bayer, R., & Fischer, K. (2021). Correlating project learning tree to 4-H life skills: Connections and implications. Journal of Extension, 57(5), Article 27. https://tigerprints.clemson.edu/joe/vol57/iss5/27/

Benge, M., Martini, X., Diepenbrock, L. M., & Smith, H. A. (2020). Determining the professional development needs of Florida integrated pest management Extension agents. Journal of Extension, 58(6), Article 15. https://tigerprints.clemson.edu/joe/vol58/iss6/15/

Borich, G. D. (1980). A needs assessment model for conducting follow-up studies. Journal of Teacher Education, 31(3), 39–42. https://doi.org/10.1177/002248718003100310

Connor, N. W., Dev, D., & Krause, K. (2018). Needs assessment for informing Extension professional development trainings on teaching adult learners. Journal of Extension, 56(3), Article 25. https://tigerprints.clemson.edu/joe/vol56/iss3/25/

Dillman, D. A., Smyth, J. D., & Christian, L. M. (2014). Internet, phone, mail, and mixed-mode surveys: The tailored design method (4th ed.). John Wiley & Sons.

Gunn, P., & Loy, D. (2015). Use of interactive electronic audience response tools (clickers) to evaluate knowledge gained in Extension programming. Journal of Extension, 53(6), Article 24. https://tigerprints.clemson.edu/joe/vol53/iss6/24/

Harder, A. (2015). Priority competencies needed by UF/IFAS Extension county faculty. EDIS, 2015(5). https://edis.ifas.ufl.edu/publication/wc236

Iqbal, Z. M., Malik, A. S., & Khan, R. A. (2012). Answering the journalistic six on the training needs assessment of pharmaceutical sales representatives. International Journal of Pharmaceutical and Healthcare Marketing, 6(1), 71–96. https://doi.org/10.1108/17506121211216914

Krimsky, L., Adams, C., & Fluech, B. (2014). Seafood knowledge, perceptions, and use patterns in Florida: Findings from a 2013 survey of Florida residents. EDIS, 2015(4). https://edis.ifas.ufl.edu/publication/FE965

Lelekacs, J. M., Bloom, J. D., Jayaratne, K. S. U., Leach, B., Wymore, T., & Mitchel, C. (2016). Planning, delivering, and evaluating an Extension in-service training program for developing local food systems: Lessons learned. Journal of Human Sciences and Extension, 4(2). https://www.jhseonline.com/article/view/692

McClelland, D. C. (1973). Testing for competence rather than intelligence. American Psychologist, 28, 1–14. https://doi.org/10.1037/h0034092

Narine, L., & Harder, A. (2021). Comparing the Borich model with the ranked discrepancy model for competency assessment: A novel approach. Advancements in Agricultural Development, 2(3), 96–111. https://doi.org/10.37433/aad.v2i3.169

Roback, P. (2017). Using real colors to transform organizational culture. Journal of Extension, 55(6), Article 33. https://tigerprints.clemson.edu/joe/vol55/iss6/33/

Sanagorski, L. (2014). Using prompts in Extension: A social marketing strategy for encouraging behavior change. Journal of Extension, 52(2), Article 33. https://tigerprints.clemson.edu/joe/vol52/iss2/33/

Scheer, S. D., Cochran, G. R., Harder, A., & Place, N. T. (2011). Competency modeling in Extension education: Integrating an academic Extension education model with an Extension human resource management model. Journal of Agricultural Education, 52(3), 64–74. https://doi.org/10.5032/jae.2011.03064

Appendix A: Conducting the Needs Assessment Series Overview

Conducting the Needs Assessment #1: Introduction

General summary of needs assessments, including what a needs assessment is, the different phases, and tools to conduct a needs assessment.

Conducting the Needs Assessment #2: Using Needs Assessments in Extension Programming

Overview of using needs assessments as part of the Extension program planning process.

Conducting the Needs Assessment #3: Motivations, Barriers, and Objections

Information about the motivations, barriers, and objections to conducting needs assessments for Extension professionals and service providers.

Conducting the Needs Assessment #4: Audience Motivations, Barriers, and Objections

Information about the motivations, barriers, and objections that clientele and communities may have for participating or buying into a needs assessment.

Conducting the Needs Assessment #5: Phase 1—Pre-assessment

Introduction to the Pre-assessment phase of conducting a needs assessment, including defining the purpose, management, identifying existing information, and determining the appropriate methods.

Conducting the Needs Assessment #6: Phase 2—Assessment

Introduction to the Assessment phase of conducting a needs assessment, including gathering and analyzing all data.

Conducting the Needs Assessment #7: Phase 3—Post-assessment

Introduction to the Post-assessment phase of conducting a needs assessment, including setting priorities, considering solutions, communicating results, and evaluating the needs assessment.

Conducting the Needs Assessment #8: The Borich Model

Overview of using the Borich Model to conduct a needs assessment.

Conducting the Needs Assessment #9: The Nominal Group Technique

Overview of using the Nominal Group Technique to conduct a needs assessment.

Conducting the Needs Assessment #10: The Delphi Technique

Overview of using the Delphi Technique to conduct a needs assessment.

Conducting the Needs Assessment #11: The Causal Analysis Technique

Overview of using the Causal Analysis Technique to conduct a needs assessment.