Ripple Effects Mapping (REM) is a qualitative evaluation approach that characterizes the effects, or “ripples,” of a program by collecting stories from program participants and stakeholders in a guided group discussion format. REM is a relatively new method for Extension program evaluation that actively involves program participants, documents their stories, and renews momentum for Extension programs. It is designed to capture unanticipated and “downstream” program effects that would otherwise go unnoticed.

This Ask IFAS publication introduces Extension agents to REM, encourages them to continue exploring the methodology, and promotes collaboration with agents currently using it to learn more about ways to apply it to their own work. REM enables stakeholders, program participants, Extension agents, and decision makers to understand the complex and indirect outcomes that emerge from Extension education initiatives. This guide will explain the four key components of REM, describe the steps to conduct REM for Extension program evaluation, and demonstrate a real-life example of the use of REM in an Extension program.

The Four Key Components of Ripple Effects Mapping

1. Appreciative Inquiry

REM uses group discussions to collect evaluation data. These sessions start with participants interviewing each other using appreciative inquiry questions to identify the best things associated with the program. Appreciative inquiry is a strengths-based approach in which participants recount the good or helpful things that came out of the group’s efforts. The insights generated from appreciative inquiry can then be used to inform future program innovations (Coghlan et al., 2003). Carefully developed discussion questions prime participants to reflect on positive experiences from the Extension program. By highlighting and recording these positive outcomes, program participants can collaboratively build on these experiences while planning to move toward a desired future. Examples of appreciative inquiry discussion questions include the following:

- “What new opportunities or resources have come about from this collaboration?”

- “Is there anything you are especially proud of?”

- “Can you share a story of some key benefits that have resulted from the program?”

2. Participatory Approach

The participatory approach aims to shift decision-making power from the researchers to the program participants. Ideally, REM participants actively influence the evaluation process, and their perspectives, rather than those of the researcher, are centered. The depth of participation and the degree to which participants make decisions may vary, but in REM, stakeholders, especially program staff, have many opportunities to shape the evaluation. Key stakeholders can collaborate with the lead evaluator to determine who should participate, design the REM group session, write the appreciative inquiry questions, help interpret the data, and give feedback on the coding. This participatory approach increases the likelihood that evaluation results will be useful for stakeholders (Chazdon et al., 2017).

3. Interactive Group Interviewing

In REM, program participants and stakeholders gather for interactive group interviewing and reflection in which they describe experiences and outcomes in their own words without the constraints of closed-ended questions informed by evaluator assumptions or predetermined program objectives. This allows participants to define the areas of importance and work together to identify program effects, often generating new knowledge and identifying unanticipated areas of impact, influence, or correlation. This process, which takes place in a large group of program participants, includes steps such as interviewing in pairs, reporting to the entire group, and reflection and comments. The reporting and discussion are recorded in writing for the group to see, and participants are encouraged to reflect on the results and add further comments. This approach combines intrapersonal reflection and interpersonal dynamics to give participants a greater understanding of the program and to generate ideas and excitement to move forward (Chazdon et al., 2017).

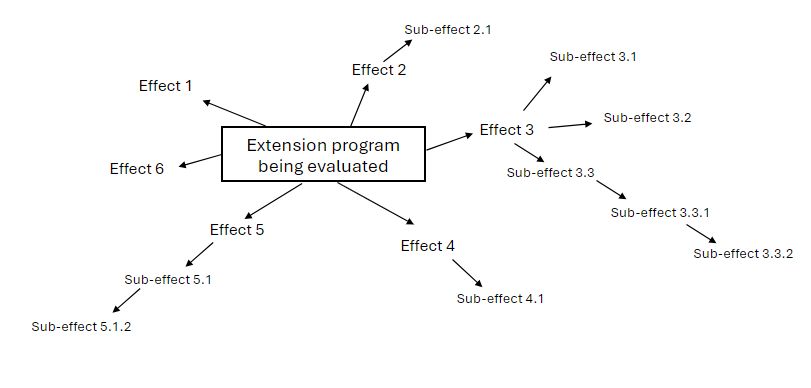

4. Radiant Thinking

REM employs “radiant thinking.” This is a visual representation of the group interview discussion in a “mind map,” which demonstrates “causally linked chains of effects” and allows participants to view the big picture of program outcomes and specific details simultaneously (Chazdon et al., 2017, p. 15). For in-person meetings, the map is drawn on a large sheet of butcher paper taped to a wall where all participants can see it. For online meetings, mind mapping software (such as Xmind or MindMeister) can be used. During the group data collection session, as participants share their stories, the “mapper” on the evaluation team records the stories on the paper/software to create a mind map in real time. Each story radiates out from a central point on the map meant to represent the Extension program, and ideally, the story sequence follows chronological and causal order according to the participant’s account (Figure 1). The facilitator can help encourage storytellers to pay attention to causal linkages by asking prompting questions to move forward (“What happened after that?”) or backward (“What caused/led to that?”). The mapper documents the chain of events and outcomes accordingly (Chazdon et al., 2017).

Credit: Kelly Wilson, UF/IFAS
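For teams that keep a digital copy of the map, the radiating structure described above can be thought of as a tree: the program sits at the center, and each story extends outward as a chain of causally linked bubbles. The following is a minimal Python sketch under that assumption. The `Node` class, the `chains` function, and the example stories are hypothetical illustrations, not part of the REM method or of any mind mapping software.

```python
from dataclasses import dataclass, field


@dataclass
class Node:
    """One bubble on the map: the program, an outcome, or a ripple."""
    text: str
    children: list["Node"] = field(default_factory=list)

    def add(self, text: str) -> "Node":
        """Attach a downstream effect and return it so the chain can continue."""
        child = Node(text)
        self.children.append(child)
        return child


def chains(node: Node, path: tuple[str, ...] = ()) -> list[list[str]]:
    """Return every center-to-edge chain, i.e., each complete story thread."""
    path = path + (node.text,)
    if not node.children:
        return [list(path)]
    threads = []
    for child in node.children:
        threads.extend(chains(child, path))
    return threads


# Hypothetical example content, not taken from a real session.
program = Node("Extension program")
workshop = program.add("Attended gardening workshop")
garden = workshop.add("Started a school garden")
garden.add("Cafeteria serves garden produce")
garden.add("Students run a farmers market booth")
program.add("New partnership with local food bank")

# Each printed line reads in causal order ("What happened after that?").
for thread in chains(program):
    print(" -> ".join(thread))
```

Reading a thread from left to right corresponds to the facilitator's forward prompt, and reading it from right to left corresponds to the backward prompt.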

How to Implement Ripple Effects Mapping

Step 1: Convene the evaluation team.

It is important to have representative stakeholders participate in the REM process, and this begins with the evaluation team or steering committee. Before starting the REM process, gather a small team of key participants and strategic collaborators to guide recruitment, design the research instrument (appreciative inquiry questions), and plan the logistics of the meeting(s). Because REM is a participatory evaluation, these key individuals should be included in evaluation planning, implementation, analysis, and report development. Depending on the program’s nature, these people may be program participants, community members, educators, and/or funding agency representatives. Consider inviting Extension colleagues in your county or region to collaborate on the evaluation, especially those with prior experience in REM. Keep in mind that not everyone who is relevant to the program or could help guide the REM process will be available. Always be conscious of “who is not here but should be” and try to include them in some way or at least get their feedback.

Step 2: Plan the data collection session and gather the critical stakeholder groups that participated in the program.

Next, plan and schedule the data collection session(s) with the evaluation team. This involves developing generative appreciative inquiry questions, assigning each evaluation team member a role for the data collection sessions and data analysis (described in steps 3 and 4, respectively), selecting a central meeting location, crafting both a process agenda and a participant-facing agenda for the events, and identifying all relevant stakeholders to invite. The planning stage is also an important time to determine the degree of participant involvement and influence in the evaluation. Consider what role program staff and stakeholders will play in each step of the process. Ask whether and to what degree program participants will develop questions and agendas, facilitate data collection, analyze data or provide input on data analysis, and craft final reports. Be transparent with participants about what you expect from them and what they can expect from you.

To create a comprehensive invitee list, brainstorm all the critical stakeholder groups related to the program. Be sure to consider any individuals who would provide helpful insights and rich stories to the REM evaluation. These groups and individuals should include both direct program participants and non-participant stakeholders. A group of 12–20 participants per session is ideal (Chazdon et al., 2017). Depending on stakeholder numbers, consider planning multiple data collection sessions with both in-person and virtual options to maximize participation while maintaining the 12- to 20-person group size. Create registration forms for participants and use an invitation and registration tracking spreadsheet to communicate progress and follow-up needs among the evaluation team. Strategically assign evaluation team members to invite individuals with whom they already have close relationships. Consider offering appropriate incentives for participation, which can include food, professional development opportunities, or organizational credit.
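The group-size guidance above lends itself to quick arithmetic when deciding how many sessions to schedule. The sketch below is one possible way to do that in Python; the function names and the even-split rule are assumptions made for illustration, and only the 12–20 range comes from the guidance above.

```python
import math


def plan_sessions(n_registered: int, max_size: int = 20) -> list[int]:
    """Split registrants into the fewest sessions of at most max_size,
    keeping the session sizes as even as possible."""
    if n_registered <= 0:
        return []
    n_sessions = math.ceil(n_registered / max_size)
    base, extra = divmod(n_registered, n_sessions)
    # The first `extra` sessions take one additional participant.
    return [base + 1] * extra + [base] * (n_sessions - extra)


def undersized(sizes: list[int], min_size: int = 12) -> list[int]:
    """Return any session sizes that fall below the recommended minimum."""
    return [size for size in sizes if size < min_size]


for registered in (18, 21, 45):
    sizes = plan_sessions(registered)
    print(registered, "registered:", sizes, "| below minimum:", undersized(sizes))
```

When a split leaves sessions below the minimum, as with 21 registrants here, the evaluation team might recruit a few more participants or accept slightly smaller groups; that judgment belongs to the team, not the arithmetic.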

Step 3: Conduct the REM data collection session and create the visual map.

Having a detailed meeting process agenda with step-by-step objectives, delegated roles, activities, and supplies enhances the group meeting and facilitates good participation. The core principles of appreciative inquiry and a participatory approach are embodied in the REM data collection session through the interactive group interview. To enhance the group dynamic, arrange tables and chairs in a circle or semicircle so that participants face one another, and place the flipchart paper or computer screen at the front where everyone can see the mind map.

Follow the process agenda to conduct the session. After an initial welcome, discuss the evaluation goals and nature of the REM approach. Split the group into pairs to conduct peer interviewing with the appreciative inquiry discussion questions for 10–15 minutes. Pair individuals with those they are less familiar with to foster additional connections between stakeholders.

Next, reconvene the group for facilitated story sharing and discussion for about an hour. During this time, the facilitator guides the group, keeping everyone on track and asking prompting questions to elicit more information, including additional details about the narrative accounts and insights regarding causal contributions or a series of events that led to the identified outcome(s). A facilitator must have the skills to ensure equitable participation, keep time, engage participants, and discern which lines of inquiry are worth pursuing. A “mapper” records the stories from the participants as a mind map on a large sheet of paper at the front of the room (or in online mind mapping software in virtual settings). The mapper should seek to document these stories to show the sequential, snowballing outcomes from initial program events or activities. The identified “ripple effects” often extend beyond the intended scope of the program. On the map, connect the initial outcomes, activities, and ripple effects, tracing the pathways from the program activities to the broader impacts. This step helps visualize the cause-and-effect relationships between different elements of the Extension program.

To close the session, facilitate a reflective discussion with stakeholders to explore the significance and implications of the ripple effects. Encourage participants to share their thoughts on unexpected outcomes and opportunities for further development. Example discussion questions could include the following:

- “Which outcomes strike you as most significant?”

- “As you review our mind map and reflect on what you heard, what surprises you?”

- “What ideas do you have to channel our momentum and build on these achievements for even more impact?”

Throughout the session, a notetaker transcribes the dialogue to record additional details not captured in the initial mind map and to create a backup narrative that can be compared to the map for validation and future story sharing. The session may also be audio- or video-recorded with participants’ consent.

Step 4: Compile and analyze data.

Transcribe paper maps into online mind mapping software, such as Xmind or MindMeister. These platforms are equipped with protections for data security and offer both free versions and various pricing plans that include additional features. Compare the data points and story threads on the map to the session transcripts to ensure that all stories are included and that story threads are accurate. As you compare the story threads on the map to the transcript, pay attention to causation and story sequence. Double-check that the story threads are sequentially ordered according to logical causal linkages based on what the participant shared. Sometimes participants tell stories out of order (e.g., stating the final outcome first and backfilling with the details of how it came about). In that case, the mapper may have recorded the outcome as the first bubble in the thread when it belongs last, after the events that led to it; the transcript review allows this to be corrected. Then, combine maps from multiple sessions into a single map for the whole program evaluation, grouping stories together by theme.

Export the digital map data to a spreadsheet for coding. Code the data from the visual map to identify common themes and patterns in the ripple effects; refer to the discussion transcript as needed to clarify any points of confusion. Analyze the data to understand the overarching impact of the Extension education program on the community.
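To illustrate what a coding spreadsheet might look like, the Python sketch below flattens a small map into one row per bubble and writes it as CSV. It assumes the map is already available as nested (statement, children) pairs; it does not read any real Xmind or MindMeister export format. The column names, including the blank `code_1` and `code_2` columns for two coders, and the example stories are hypothetical.

```python
import csv
import io

# Hypothetical map content as nested (statement, children) pairs.
ripple_map = ("Extension program", [
    ("Attended gardening workshop", [
        ("Started a school garden", [
            ("Cafeteria serves garden produce", []),
        ]),
    ]),
    ("New partnership with local food bank", []),
])

FIELDS = ["depth", "parent", "statement", "code_1", "code_2"]


def flatten(node, parent="", depth=0):
    """Yield one row per bubble, keeping its parent so the causal order survives."""
    statement, children = node
    yield {"depth": depth, "parent": parent, "statement": statement,
           "code_1": "", "code_2": ""}
    for child in children:
        yield from flatten(child, parent=statement, depth=depth + 1)


rows = list(flatten(ripple_map))

# Write to an in-memory buffer; replace it with open("map.csv", "w", newline="")
# to produce a file that opens in a spreadsheet program.
buffer = io.StringIO()
writer = csv.DictWriter(buffer, fieldnames=FIELDS)
writer.writeheader()
writer.writerows(rows)
print(buffer.getvalue())
```

Keeping the `parent` and `depth` columns lets coders see where each statement sits in its story thread while they assign codes.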

As in most qualitative data analysis, REM requires at least two coders to analyze the statements documented on the map and verified against the transcript. Coders can assess the data independently, then meet to compare codes and discuss any differences before reaching consensus. While there is often no “right” way to code any given data point, the goals in data analysis are consistency in the rationale for coding decisions across the dataset, fidelity to the construct definitions in the chosen theoretical framework (if using one for closed coding), and reasonable justification for those decisions. Meeting these goals helps the coders reach consensus.
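Before the coders meet, it can help to list exactly which statements they coded differently. The Python sketch below shows one simple way to do that and to compute the share of statements on which they agreed. Simple percent agreement is an illustrative choice here, not a measure prescribed by the REM method, and the statement IDs and code labels are hypothetical.

```python
# Hypothetical independent codes from two coders, keyed by statement ID.
coder_a = {"s1": "skills gained", "s2": "new partnerships",
           "s3": "funding", "s4": "new partnerships"}
coder_b = {"s1": "skills gained", "s2": "new partnerships",
           "s3": "new partnerships", "s4": "new partnerships"}


def compare_codes(a: dict[str, str], b: dict[str, str]):
    """Return the proportion of matching codes and the list of disagreements
    for statements that both coders coded."""
    shared = sorted(a.keys() & b.keys())
    disagreements = [(sid, a[sid], b[sid]) for sid in shared if a[sid] != b[sid]]
    agreement = (len(shared) - len(disagreements)) / len(shared) if shared else 0.0
    return agreement, disagreements


agreement, disagreements = compare_codes(coder_a, coder_b)
print(f"Agreement: {agreement:.0%}")
for sid, code_a, code_b in disagreements:
    print(f"Discuss {sid}: coder A = {code_a!r}, coder B = {code_b!r}")
```

The disagreement list serves as the agenda for the consensus meeting; the agreement figure is only a rough summary and does not replace the discussion of coding rationale.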

Step 5: Report and utilize findings.

A comprehensive report should detail the outcomes of the REM evaluation to share the findings with all stakeholders, including funders, program participants, and community members. Use the results to improve the Extension education program and inform future program planning. The report should highlight specific stories that the participants identified as significant and describe the key areas of program success. It should identify the areas where program outcomes could be leveraged for additional impact and any gaps or areas where outcomes are limited.

Conclusion

REM is a valuable approach that is appropriate for Extension program evaluation. It holds the potential to go beyond traditional surveys and to document valuable information while meaningfully engaging and energizing stakeholders in the process. This is useful for understanding far-reaching and unanticipated program outcomes and developing effective communications that demonstrate the program’s value in participants’ own words. This participatory discovery process can also serve as a first step in “generative program planning,” which builds on what is best about the program and identifies gaps in community capitals.

Additional Resources

To learn more about REM and begin applying this method to your work, explore the resources below.

- A Field Guide to Ripple Effects Mapping (Chazdon et al., 2017): A comprehensive guide for conducting REM that includes multiple case study applications.

- REM Studio (https://remstudio.org/): A national collaboration of Extension agents whose website houses resources for conducting REM evaluation. They also offer services such as coaching, facilitation, and training on REM.

- UF/IFAS Extension Community Development Team (Microsoft Teams): An online group managed by UF/IFAS Community Development Extension agents. The group houses informational resources and a space for collaboration among UF/IFAS Extension personnel.

References

Chazdon, S., Emery, M., Hansen, D., Higgins, L., & Sero, R. (2017). A Field Guide to Ripple Effects Mapping. https://conservancy.umn.edu/handle/11299/190639

Coghlan, A. T., Preskill, H., & Tzavaras Catsambas, T. (2003). An overview of appreciative inquiry in evaluation. New Directions for Evaluation, 2003(100), 5–22. https://doi.org/10.1002/EV.96

Appendix A. F2S REM process agenda for in-person session.

REM | F2S Program

April 20, 2023

5:00 p.m. to 7:30 p.m.

School District Conference Room